AI as teammate: The human systems approach

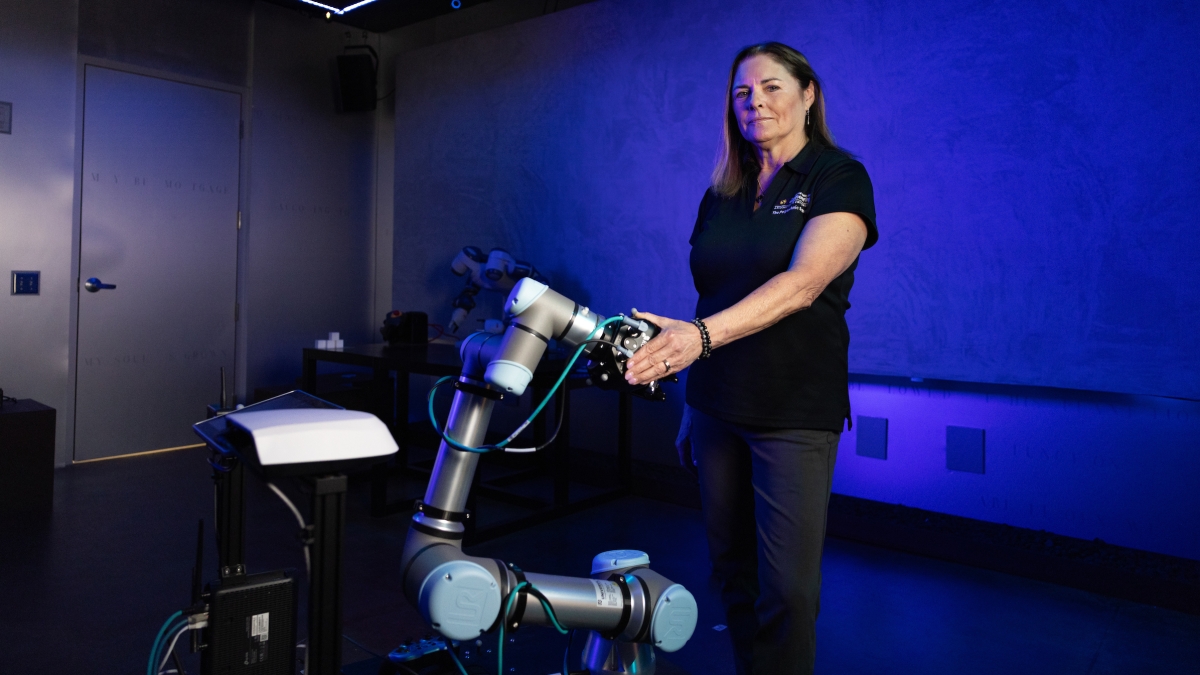

Nancy Cooke, ASU human systems engineer and senior scientific advisor of the Global Security Initiative’s Center for Human, Artificial Intelligence, and Robot Teaming, stands next to a Robotiq machine in the GHOST Lab at ASU's Polytechnic campus. The GHOST (General Human Operation of Systems as Teams) Lab is a scientific test bed. Photo by Samantha Chow/ASU

Editor's note: This expert Q&A is part of our “AI is everywhere ... now what?” special project exploring the potential (and potential pitfalls) of artificial intelligence in our lives. Explore more topics and takes on the project page.

For Arizona State University researcher Nancy Cooke, artificial intelligence isn’t just about technology — it’s really about humans.

“I have to keep reminding technologists that humans are important, too,” said Cooke, founding director and senior scientific advisor of the Center for Human, Artificial Intelligence, and Robot Teaming, which houses the GHOST (General Human Operation of Systems as Teams) Lab, a scientific test bed that is also an art installation.

“We get really enamored by glitzy, shiny objects, and the robots and AI — even in this lab, the GHOST Lab, we could see how cool the robots are, but don’t forget that they're meant to work alongside humans, and so they have to be functional in that regard. So human systems engineering has really taken off with this idea of having robots and AI serve as teammates alongside humans.”

Cooke — a professor of human systems engineering in the Polytechnic School, part of the Ira A. Fulton Schools of Engineering — has studied human teams for over 30 years. Most recently, she has been exploring the role of AI and robot technology as part of those human teams. Here, she speaks with ASU News about that research.

Editor’s note: Answers may have been lightly edited for length and clarity.

Question: Can you talk a bit more about the concept of AI as a teammate?

Answer: AI can be a good teammate as well as a bad teammate. And by teammate, I mean that it’s got its own roles and responsibilities, and I really don’t believe in replicating human roles, responsibilities or capabilities in AI because we can do them just fine ourselves. Maybe in some situations where it's dull, dirty or dangerous, you want to send the AI in. But for the most part, I think AI should be used to complement the skills that we already have.

I’ve been really intrigued with the centaur model, which came out of chess research. When a pretty good chess program or chess AI was paired with a pretty good chess master, the team went on, in that case, to beat Garry Kasparov, the best grandmaster of them all, and the best chess program of them all.

What I’m trying to do is figure out ways that we can make centaur-type teams: put the best capabilities of AI and the best capabilities of humans together to make a superhuman team. I’m also working with Heather Lum, who’s here (in ASU’s Polytechnic School), and she’s a search-and-rescue dog trainer. So another example of centaurs (maybe it’s something other than a centaur) is to add dogs and marine mammals to teams as teammates.

There was some work done by Scientific Systems Inc. that put a dog on a search-and-rescue team with a human and a drone. The dog was outfitted with some sensors, and the drone could direct it, telling the dog where to go, because the drone could see farther. And the human was monitoring all of the feeds coming back from the dog. Together, they made a good team. So put the best of these things together and see what happens.

Q: What does it mean for these teams to be effective?

A: In our group, we’ve been doing a lot of work on measurement. Can we take signals off of these very complex systems, sometimes with lots of technology and lots of humans, and understand from moment to moment how effective they are? And can we pinpoint where in these really complex systems failures occur? We’ve done some work for the Air Force/Space Force to look at complex, distributed space operations in this way, where you have robots and AI and humans in a very distributed system.

And how can we refine our measurement techniques to understand what’s going on in the system and where our attention, the human’s attention, needs to turn because something’s happening in one part of the system?

One of the things that we need to get this research done is test beds — both physical test beds like the GHOST Lab and virtual test beds. We make heavy use of Minecraft to build virtual test beds in which we can put humans and machines together to test their effectiveness and to look at different ways of maybe having them interact. In this GHOST Lab, we can bring humans in, have them interact with the robots in different ways and see which way is most effective.

I think we have to assume that we’re building technology for any person to be able to use. So in human systems engineering, we will take the end user into account and try to design the system so that ... particular user can use the system without extended training, without too much effort, and use it safely.

Q: How key is it that people understand AI?

A: AI literacy is very important. It should be the job of ASU and professors at ASU to teach AI literacy to all the students here. I think that comes at different levels depending on what your role is with AI. Are you the one that’s going to be programming AI, or are you just a user of AI? Even with ChatGPT, I think there's a lot of room for AI literacy and how you use ChatGPT in a way that produces something that’s accurate and not plagiarized.

I want to reinforce the idea that this work is so multidisciplinary, even transdisciplinary, that you can’t do it with one discipline. Human systems engineering is just one discipline, but it is sometimes the discipline that’s forgotten when we’re out developing AI. ... Humans should be at the center. But we can’t do it alone. We need people who are well versed in AI and robotics and technology in general, as well as the end user.

Q: A lot of people are worried about the effects of AI. How do you see that?

A: There are sort of two scenarios that people think will play out. In one, AI will take our jobs and kill us in the middle of the night. I’m definitely hoping that that’s not the scenario.

I think we have to work really hard to imbue humans with superhuman capabilities through well-thought-out use of AI and robotics. So will people still be needed? I’m in a debate coming up here in September on whether we still need humans at work, and I say yes.

We will probably be much more productive at work. Maybe we’ll get four- or even three-day workweeks because of AI and robots. To me, that’s the happy scenario that I’m hoping for. That’s exactly what the center is all about: human-centered AI, and the way that we make it human-centered is by assembling good teams of humans and AI.

