With help from AI, ASU researcher develops models to address climate change, other global issues

Arizona State University Assistant Professor Hannah Kerner is developing machine learning tools that can yield actionable information from vast amounts of largely untapped data continuously captured by satellites in orbit around the Earth. Photo by Samantha Chow/Arizona State University

Hannah Kerner ponders planetary problems: How can we better adapt to and mitigate climate change? How can we improve the quality of human life without exceeding the resource budget of the Earth? How can we ensure that the capabilities of new technology move society toward greater equity?

Kerner is an assistant professor of computer science at Arizona State University, and she believes artificial intelligence is a means to address many of these global challenges, not least because we already have the data needed to do so.

“We collect enormous amounts of data from sources including orbiting satellites and ocean sensors. We capture hundreds of terabytes every day and we have done so for decades,” says Kerner, a faculty member in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at ASU. “But now we can take these measurements and process them with AI to give us actionable information. We can enable decisions that yield real-world solutions.”

Kerner speaks with principled purpose, but also with practical experience and proven success. She leads the development and deployment of AI and machine learning tools for the NASA Acres and NASA Harvest consortia, which apply satellite observations to tackle pressing agricultural and food security challenges in the U.S. and worldwide.

Lacking labels

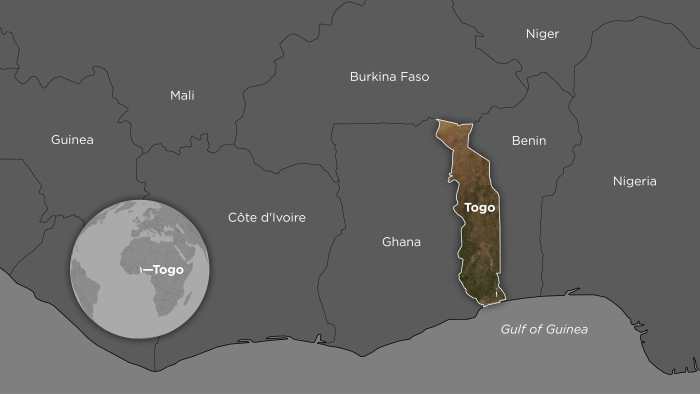

The powerful potential of this work was demonstrated in 2020 through a project request from the West African country of Togo. The COVID-19 pandemic had disrupted supply chains and prevented farmers from getting necessary agricultural supplies as well as selling their crops at market. In response, the Togolese government wanted to disburse aid funds to smallholder populations across the country, but demographic records were insufficient for the task.

“The need was urgent so they asked whether our satellite data could help to quickly fill that gap. Could we identify cropland as a proxy for smallholder populations to help the government determine how to appropriately allocate funding across different districts?” Kerner says. “And could we do it within a week?”

Togolese authorities had already sought support from other sources, but no solutions emerged because they lacked adequate “labeled data,” which are tagged examples that serve as points of reference to train AI models.

If an algorithm is supposed to correctly identify visual data representing an agricultural field, it generally needs to be shown an enormous number of verified samples. These were not available in Togo.

Fortunately, Kerner and her research group had been working on methods to tackle exactly this kind of real-world problem with little or no labeled data. They applied the machine learning methods they were developing to create a cropland classification map of Togo with 10-meter-per-pixel resolution.

“That means every 10-meter-by-10-meter area of ground was marked as being either cropland or not cropland,” Kerner says. “It was the highest resolution agricultural map ever made of the country, and that clarity provided a crucial information source for the government to distribute necessary aid to more than 50,000 farmers.”
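To make the 10-meter figure concrete, here is a minimal Python sketch, not the team's code, of how a binary cropland map at that resolution translates into area. Each 10-meter-by-10-meter pixel covers 100 square meters, or 0.01 hectare. The function name `cropland_hectares` and the toy map values are assumptions made for illustration.

```python
PIXEL_SIZE_M = 10.0          # map resolution stated in the article
SQ_M_PER_HECTARE = 10_000.0  # 1 hectare = 10,000 square meters

def cropland_hectares(mask, pixel_size_m=PIXEL_SIZE_M):
    """Return the cropland area in hectares of a binary map (1 = cropland)."""
    cropland_pixels = sum(cell for row in mask for cell in row)
    return cropland_pixels * pixel_size_m ** 2 / SQ_M_PER_HECTARE

# Hypothetical 4x4 map: 6 of its 16 pixels are marked as cropland.
toy_mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
]
toy_area_ha = cropland_hectares(toy_mask)  # 6 pixels * 100 m^2 each
```

Summing such areas by district is one plausible way a map like this could inform how aid is divided, though the article does not describe the government's actual calculation.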

Covering the distance

A lack of labeled data is a common obstacle to interpreting remote-sensing data worldwide because creating labels is slow and expensive, especially in vast and remote agricultural regions.

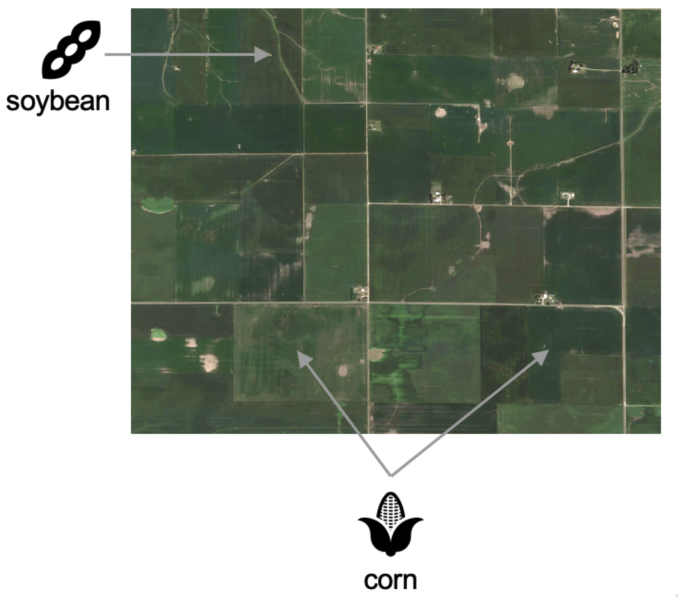

“You can’t just look at a satellite image and determine what is growing at a given site,” Kerner says. “For example, a field of corn and a field of soybeans are going to appear the same to our eyes in that image.”

Someone needs to physically visit each location to record what is being cultivated. Also, identifying crops requires specialized knowledge beyond the training of most computer scientists and their students. Accurate labels therefore require informed input from farmers or agricultural authorities, which in turn requires time to establish relationships and trust with them.

Kerner and her colleagues, including agricultural remote sensing expert and NASA Harvest Africa lead Catherine Nakalembe, wanted to create a faster way to gather this ground-level, or “ground-truth,” labeled data.

They developed a method called Street2Sat in which GoPro cameras were mounted on cars and motorcycles driven on roads alongside farm fields to capture geotagged and timestamped images, similar to Google Street View. These images were then supplied to computer vision algorithms to automatically detect crops and create a ground-truth crop type label that could be paired with corresponding satellite data for each location.

“Most of automating how we processed these surveys went as expected. But the difficult part was managing the distance offset,” Kerner says. “We didn’t want location data for the vehicle-mounted cameras on the roads. We wanted to record the locations of the crops that were photographed by those cameras.”

Kerner says off-the-shelf computer vision models for depth estimation do not work well in open environments where objects might be far away. She says they work for objects less than 4 meters from a camera, but beyond that range, such as the 10 to 12 meters that often separate crops from a road, the models generate significant errors. Kerner and her team tried a variety of approaches to correct the problem, but without success.
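The offset Kerner describes is, at bottom, a geometry problem. As a minimal sketch, and not the team's method, the Python below shows how a camera's GPS position, its compass heading and an estimated distance could be combined to place a label in the field rather than on the road. The function name `offset_position`, the spherical-Earth approximation and the sample coordinates are all assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def offset_position(lat_deg, lon_deg, heading_deg, distance_m):
    """Move distance_m from (lat, lon) along a compass heading; return (lat, lon)."""
    lat1 = math.radians(lat_deg)
    lon1 = math.radians(lon_deg)
    bearing = math.radians(heading_deg)
    ang = distance_m / EARTH_RADIUS_M  # angular distance in radians

    lat2 = math.asin(
        math.sin(lat1) * math.cos(ang)
        + math.cos(lat1) * math.sin(ang) * math.cos(bearing)
    )
    lon2 = lon1 + math.atan2(
        math.sin(bearing) * math.sin(ang) * math.cos(lat1),
        math.cos(ang) - math.sin(lat1) * math.sin(lat2),
    )
    return math.degrees(lat2), math.degrees(lon2)

# Hypothetical camera on a road, facing due east, crop estimated 12 m away.
crop_lat, crop_lon = offset_position(0.0, 36.0, heading_deg=90.0, distance_m=12.0)
```

The result is only as good as the distance fed into it. In this sketch, a depth estimate of 4 meters for a crop that is really 12 meters away would place the label 8 meters short, nearly the width of one 10-meter satellite pixel.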

“Then we took a step back and asked what we were really trying to do here — as human beings — to solve this problem,” she says. “We’re looking in Google Earth at where this camera is located on a road, and we know it’s pointed in ‘this’ direction. It’s ‘this’ field that we want the model to predict. So maybe we should just have the model solve that specific problem.”

They then trained a segmentation model that takes an image marked with an arrow and predicts which field the arrow points to. The approach succeeded, so much so that the team has now used its Street2Sat pipeline to process more than 5 million images from countries including Kenya, Nigeria, Rwanda, Tanzania, Uganda and the United States.

LLM to LEM

This process of speeding up the rate of data collection is one way around the roadblock of insufficient labels. Another way is to learn more efficiently from the labels that are already available so that relatively few of them are needed to learn a new task. That latter approach is called few-shot learning.

“In computer vision work, we typically take some pre-trained model and fine-tune it to perform a new task for which we have just a small amount of data,” Kerner says. “Unfortunately, there is no pre-trained model for satellite remote-sensing data.

“People have tried, but it hasn’t worked well because there are very limited numbers of labels for model training. They’re just not representative of all the image patterns we see across the planet, and that yields very biased or incorrect results.”

But there has been recent, high-profile progress with models using huge amounts of unlabeled data to accomplish self-supervised pre-training. They use text from the internet rather than satellite data, and they are known as large language models, or LLMs. The most prominent example is the model family behind ChatGPT.

Kerner believes that if computer science can succeed with LLMs, it can succeed with what she is calling LEMs or Large Earth Models. And this is exactly what she and her colleagues are seeking to do now.

“We want to apply the huge amounts of multidimensional satellite data we are constantly collecting to train models in a self-supervised way,” she says. “Doing so means we could use those models as a starting point to fine-tune for many different tasks.”

Kerner is thinking of much more than crop type identification. She points to classifying forests, monitoring deforestation, detecting methane emissions, tracking weather systems, counting livestock and more. She says these capabilities are all possible through the development of AI tools operating at planetary scale.

Metrics that matter

Toward these ends, she and her team have designed and continue to refine a model called Presto, which stands for pre-trained remote sensing transformer. She says it reflects the nuances of working with remote-sensing data as compared to language processing, and it respects the realities of real-world application.

“We have deliberately used a wide variety of input sensors during pre-training. We don’t want to require the use of one specific satellite or type of satellite,” Kerner says. “We also integrated what is called structured masking so that the model can work around missing data.”
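The article does not spell out how Presto's structured masking works. As a rough illustration only, the Python sketch below assumes it means hiding whole blocks of the input during training, such as a run of consecutive dates or every reading from one sensor, rather than scattered individual values. The function names and the toy data are hypothetical.

```python
def mask_timesteps(num_timesteps, num_channels, start, length):
    """Hide a contiguous block of timesteps across all channels (True = hidden)."""
    return [
        [start <= t < start + length for _ in range(num_channels)]
        for t in range(num_timesteps)
    ]

def mask_channel_group(num_timesteps, num_channels, group):
    """Hide every timestep for one group of channels, such as one sensor."""
    hidden = set(group)
    return [
        [c in hidden for c in range(num_channels)]
        for _ in range(num_timesteps)
    ]

def apply_mask(series, mask, fill=None):
    """Replace hidden values with a placeholder the model must work around."""
    return [
        [fill if is_hidden else value for value, is_hidden in zip(row, mask_row)]
        for row, mask_row in zip(series, mask)
    ]

# Toy input: 6 timesteps, 4 channels (values are arbitrary).
series = [[float(t * 10 + c) for c in range(4)] for t in range(6)]

# Two consecutive dates lost, for example to cloud cover.
cloudy = apply_mask(series, mask_timesteps(6, 4, start=2, length=2))

# One sensor (channels 2 and 3) unavailable for the whole period.
no_sensor = apply_mask(series, mask_channel_group(6, 4, group=[2, 3]))
```

A model trained to reconstruct inputs hidden in these patterns has, in effect, practiced on the kinds of gaps that real satellite records contain.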

The team also feeds Presto time series data rather than spatial inputs, which keeps the model very compact: it uses about 1,000 times fewer parameters than other models. This lean design enables what is known as large-scale inference, the ability to efficiently make predictions for millions of data samples to form a meaningful map of a region, a country or the entire world.
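Because such a model reads one pixel's history at a time, building a map amounts to running it over every pixel's time series in batches and laying the answers back out on a grid. The Python sketch below illustrates that loop under stated assumptions: `toy_model` is a stand-in threshold rule, not Presto, and the data and function names are hypothetical.

```python
def batched(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    for i in range(0, len(items), batch_size):
        yield items[i:i + batch_size]

def toy_model(batch):
    """Stand-in for a trained model: label a pixel 1 if its mean value exceeds 0.5."""
    return [1 if sum(series) / len(series) > 0.5 else 0 for series in batch]

def predict_map(pixel_series, width, batch_size=2):
    """Classify each pixel's time series in batches, then reshape into map rows."""
    labels = []
    for batch in batched(pixel_series, batch_size):
        labels.extend(toy_model(batch))
    return [labels[r:r + width] for r in range(0, len(labels), width)]

# Six pixels (a 2-row by 3-column map), each with three observations over time.
pixel_series = [
    [0.9, 0.8, 0.7], [0.1, 0.2, 0.1], [0.6, 0.7, 0.9],
    [0.2, 0.1, 0.3], [0.8, 0.9, 0.6], [0.0, 0.1, 0.2],
]
toy_map = predict_map(pixel_series, width=3)
```

At real scale the list would hold millions of pixels, which is where a small parameter count pays off: each batch is cheaper to process.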

Presto’s development also employed globally representative pre-training data. So the model has been effective when evaluated on tasks involving different domains, different geographies, different scales of data and more. Consequently, it’s already being used by NASA, the European Space Agency and companies operating in multiple fields.

Kerner says this real-world deployment is key to continued success with AI research. Simply training models in labs and reporting results in peer-reviewed journals is insufficient to advance performance and impact.

“This deployment also means we need interdisciplinary teams to do the work. We need diverse collaborations from different fields,” she says. “We also need to co-develop these solutions with end users. Even when we’re doing fundamental machine learning research, we need to talk with the people who ideally will use the tools we develop. They need to tell us their needs.

“Ultimately, they are the metrics that really matter.”
