As autonomous vehicles continue to advance at an unprecedented pace, ensuring their safety is a primary concern shaping the future of transportation.

This multibillion-dollar industry has attracted many companies to Phoenix, which is seen as an ideal test bed for the technology because of its consistent weather, grid-like road system and relatively low levels of traffic compared with other major metropolitan areas. Waymo has been testing autonomous vehicles in the Valley since 2017 and could soon be followed by Apple and Tesla as they work to develop their own autonomous vehicle technology.

As people become more aware of this industry and autonomous vehicle technology, questions continue to arise about its safety and trustworthiness.

Faculty members in the School of Computing and Augmented Intelligence and The Polytechnic School, both part of the Ira A. Fulton Schools of Engineering at Arizona State University, are working to address these concerns with research spanning multiple components of autonomous vehicle advancement — from traffic monitoring and data collection to improving the software and mechanics of the vehicles themselves.

Understanding driving conditions

Researcher Yezhou “YZ” Yang, an associate professor of computer science and engineering in the Fulton Schools, is working to understand traffic through monitoring and data collection to inform autonomous vehicles.

Yang’s background in artificial intelligence and computer vision research through his Active Perception Group inspired him to co-found the company ARGOS Vision. There, he has developed an inexpensive, integrated hardware and software solution for traffic counting, which means gathering data about the vehicle and pedestrian traffic passing through intersections. Using roadside cameras installed at intersections, Yang can estimate and model the speed, acceleration and deceleration of vehicles, in addition to various intersection scenarios such as pedestrian behaviors and the yellow light dilemma.
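As a rough illustration of how speed and acceleration estimates can be derived from camera-tracked positions, the sketch below applies finite differences to a timestamped vehicle track. It is not ARGOS Vision's method: the track format, the function name and the sample values are all hypothetical, and a real system would also need camera calibration and noise filtering.

```python
from math import hypot


def speeds_and_accelerations(track):
    """Estimate speed (m/s) and acceleration (m/s^2) by finite differences.

    `track` is a hypothetical list of (time_s, x_m, y_m) samples for one
    vehicle, sorted by time, with positions already mapped to road
    coordinates in meters.
    """
    timed_speeds = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Speed over the interval, assigned to the interval midpoint.
        timed_speeds.append(((t0 + t1) / 2, hypot(x1 - x0, y1 - y0) / dt))

    accelerations = []
    for (ta, va), (tb, vb) in zip(timed_speeds, timed_speeds[1:]):
        # Negative values indicate deceleration.
        accelerations.append((vb - va) / (tb - ta))

    return [v for _, v in timed_speeds], accelerations


# Illustrative samples: a vehicle slowing as it approaches an intersection.
sample_track = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0), (2.0, 18.0, 0.0), (3.0, 24.0, 0.0)]
speeds, accels = speeds_and_accelerations(sample_track)
print(speeds, accels)
```

Assigning each speed to the midpoint of its interval keeps the acceleration estimate consistent when samples are unevenly spaced.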

Yang notes that companies can use this traffic-counting data to inform autonomous vehicles in their decision-making, thus contributing to safer intersections and more efficient city planning.

“We’re also exploring how to use existing infrastructure cameras and AI to be able to track and monitor autonomous vehicles on the road, ultimately scoring the vehicle behaviors on a variety of factors to inform autonomous driving vehicle companies on how they are performing,” he says, noting that this feedback can help the technology already on our roadways perform more reliably.

For the past five years, Yang has been working with the Institute of Automated Mobility, an Arizona-based consortium bringing together experts from private industry, government and academia to guide and deploy safe and efficient automation.

One of these collaborators is Yan Chen, an assistant professor of mechanical engineering in the Fulton Schools, who is also working to establish reliable control and safety of automated and human-driven vehicles. Chen brings expertise in design, modeling, estimation, control and optimization of dynamic systems.

“Vehicle dynamics and control play a significant role in this research because many people are only focused on sensing and perception, or helping the vehicles understand their surroundings,” Chen says. “When humans are the decision-makers in a vehicle, there are more factors to keep in mind relating to how the vehicle will or is able to respond to humans’ commands.”

Refining the vehicles

Chen also furthers this research in his lab at ASU, the Dynamic Systems and Control Laboratory, where he and his team explore automotive systems and automated driving, among many other research areas.

One of their research goals is to achieve multivehicle autonomous coordination, which Chen says promotes “safety and transportation efficiency while also improving energy efficiency in vehicles sitting in traffic.”

Outside of his efforts with Yang in the Institute of Automated Mobility, Chen is working on safety metrics to evaluate the safe following distance, stopping distance and reaction time needed when autonomous vehicles must come to a complete stop quickly.

“With human drivers, there is a level of justifying what happened and having to decide on an appropriate reaction,” Chen says, noting that autonomous vehicles have a much faster reaction and action time than human drivers.
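To make the role of reaction time concrete, the sketch below uses the standard constant-deceleration model, in which total stopping distance is the distance covered during the reaction delay plus the braking distance, d = v·t + v²/(2a). This is a textbook approximation, not Chen's safety metric, and the speed, reaction times and deceleration used here are illustrative assumptions only.

```python
def stopping_distance(speed_mps, reaction_time_s, decel_mps2):
    """Total stopping distance in meters under constant deceleration.

    reaction distance = speed * reaction time
    braking distance  = speed^2 / (2 * deceleration)
    """
    if speed_mps < 0 or reaction_time_s < 0:
        raise ValueError("speed and reaction time must be non-negative")
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction_distance = speed_mps * reaction_time_s
    braking_distance = speed_mps ** 2 / (2 * decel_mps2)
    return reaction_distance + braking_distance


# Assumed values for illustration: 20 m/s (about 45 mph), 7 m/s^2 braking,
# a 1.5 s reaction delay versus a hypothetical 0.2 s automated response.
slow_reaction = stopping_distance(20.0, 1.5, 7.0)
fast_reaction = stopping_distance(20.0, 0.2, 7.0)
print(f"1.5 s reaction: {slow_reaction:.1f} m, 0.2 s reaction: {fast_reaction:.1f} m")
```

Under this model the braking distance is identical in both cases, so the entire difference comes from the distance traveled before braking begins.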

Aviral Shrivastava, a professor of computer science, is assessing human driving decisions and how they translate to autonomous vehicles.

Shrivastava is taking a closer look at the software in autonomous vehicles, exploring motion-planning algorithms for safety in hybrid traffic, or traffic that contains both autonomous and human-driven vehicles.

The results of his latest research, published in the journal IEEE Transactions on Intelligent Vehicles, show that every driver takes an assumed risk when getting behind the wheel or into a vehicle, but autonomous vehicles following his motion-planning algorithm are “blame free” in the event of a car accident.

Shrivastava notes that “blame free” is the preferred term to describe this technology rather than “safe” because operating in a hybrid traffic environment can never guarantee safety, as human drivers bring the potential for distracted driving, split-second decision-making and more.

“In every car accident, each driver receives a certain percent of the blame,” Shrivastava says. “With our motion-planning algorithm, we’re working toward autonomous vehicles receiving 0% blame in the event of an accident, meaning they will not contribute any errors in their driving.”

Shrivastava has created open-source software to share this algorithm with companies, which he says allows the research to make a bigger impact and be directly implemented into autonomous vehicles.

On the vehicle hardware side, Abdel Mayyas, an associate professor of automotive engineering in the Fulton Schools, is working on improving the functional safety, reliability, motion planning and control of autonomous vehicles.

“We noticed that companies will expedite the process of commercialization and deployment of autonomous vehicles by doing their testing close to the deployment in real-world scenarios, which can be risky and costly,” Mayyas says.

ASU’s EcoCAR3 project, led by faculty advisor Abdel Mayyas, allowed students to redesign a Chevrolet Camaro into a hybrid-electric vehicle through the U.S. Department of Energy Advanced Vehicle Technology competition series, conducted in partnership with General Motors. Photo courtesy Abdel Mayyas

To combat this issue, Mayyas and his team are modifying the industry’s existing vehicle test bed to enable a new research capability: running tests in the lab to evaluate extreme variability and special dynamics in a more controlled and cost-effective setting. This kind of testing has historically been used on traditional vehicles but is more complex to apply to autonomous vehicles without human intervention.

“On the test bed, which acts like a treadmill, we’re exploring how vehicles maneuver to the left and right, not just forward and backward, which involves a lot of physics and is quite complicated,” Mayyas says. “Being able to test autonomous vehicles in this way would help us better evaluate how they perform in extreme scenarios and with unexpected events.”

On the safe side

In the continued effort to safeguard autonomous vehicles, Junfeng Zhao, an assistant professor of engineering in the Fulton Schools, is working with Science Foundation Arizona to evaluate and improve the safety of the different levels of automation currently in use.

“With companies like Waymo and Cruise already operating autonomous vehicles in Phoenix, it’s imperative that we evaluate the safety-related issues that the new technology may impose on the public,” Zhao says.

He is designing a comprehensive methodology to assess autonomous systems and their safety levels, which can be used as a third-party evaluation method.

Zhao notes that state and federal governments currently do not have a standardized method to evaluate the safety of autonomous vehicles. With this project, his goal is to provide a framework to fill the gap.

“Before we can persuade developers to adopt our methodology, we want to demonstrate it with a pilot study conducted on our research platform to validate the methodology we’re proposing,” Zhao says, noting that the study uses digital twin technology and augmented reality to simulate traffic scenarios for vehicle testing.

Zhao hopes to see the public, state governments and even the federal government adopt this methodology as standard practice in the future and impose regulations to ensure the safety of autonomous vehicles on the road, not just for drivers and passengers but for everyone in the community.

In the Battery Electric and Intelligent Vehicle Lab, Junfeng Zhao and his students have transformed a 2022 Ford Mustang Mach-E into an autonomous vehicle equipped with radar, an RGB camera, front and rear 360-degree lidar, and a computer and instrumentation rack. Photo courtesy Junfeng Zhao

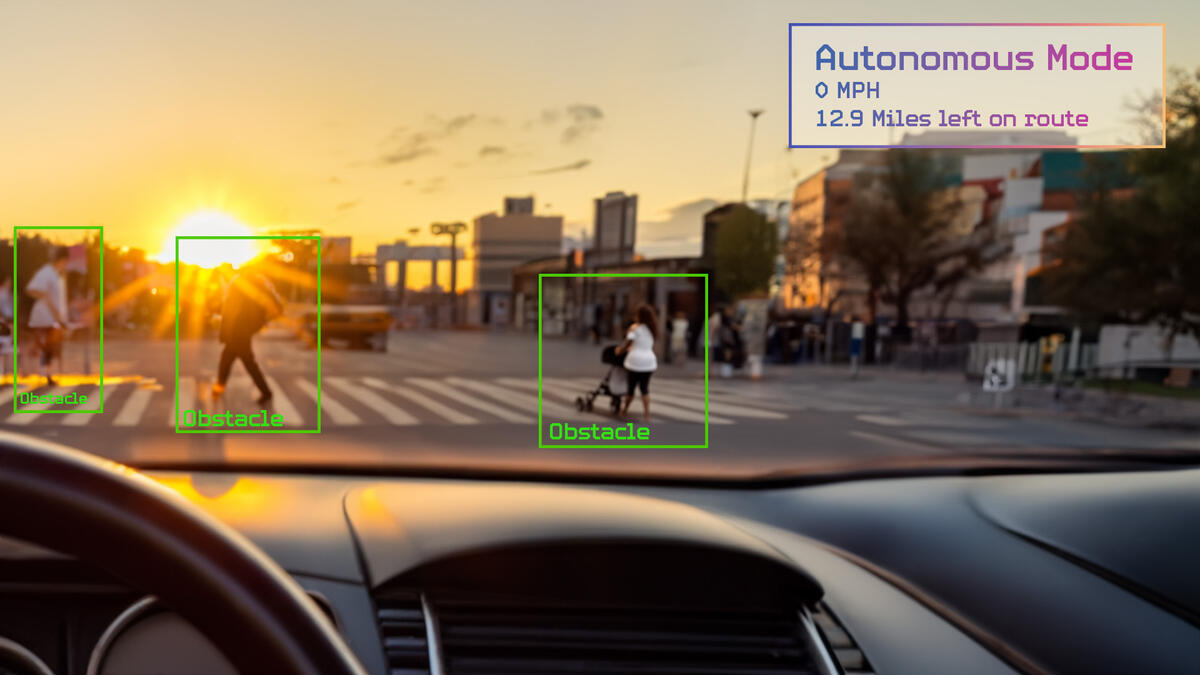

Top graphic generated using Adobe Firefly by Erika Gronek/ASU.
