Robotics may be the strangest of the hard sciences.

It’s incredibly old: Chinese artisans made humanoid figures that could sing and dance in the 10th century B.C. Alexandrian engineers built automata used in theater and religious ceremonies, as well as toys for the rich. Arabs took Greek robotic science and added practical applications. In the 12th century, a Muslim engineer named Ismail Al-Jazari built a mechanical waitress that could serve drinks.

It’s incredibly hard: Making a humanoid machine that can think and work on its own is a long way off. People will be living on Mars long before we see a robot anything like what we see in the movies. Roboticists joke that rocket science is a piece of cake compared to robotics, and they’re right. The Abraham Lincoln Audio-Animatronic at Disneyland that recites the Gettysburg Address is basically where the field is — and that’s been around since 1964.

It challenges philosophy: Robotics asks fundamental questions about the nature of humanity. Roboticists have to teach their machines things humans don’t even teach children, because children learn a lot on their own through observation — something robots can't do.

One hundred years ago this week, on Jan. 25, Czech writer Karel Čapek’s play “R.U.R.” debuted. It introduced the word “robot” to the world. The drama takes place in a factory where artificial humans are manufactured. (The robots revolt, as they so often do in popular culture.)

In this story, we look at where robots are now, where the field is headed and what obstacles lie in the way. Arizona State University has a multitude of people working in 25 labs on facets of robotics including artificial intelligence, rehabilitation, exoskeletons, foldable robots, collective behavior, human-robot collaboration, brain-machine interfaces and more.

They are mechanical engineers, electrical engineers, computer scientists, applied psychologists and human systems engineers. Ask most of them and they will say it all overlaps.

Long before the Arab, Greek and Chinese automata, robots and working machines appeared in ancient mythology. People have been captivated by the idea of creating mechanical facsimiles of themselves for a very long time. Why?

Heni Ben Amor directs the Interactive Robotics Laboratory at ASU. Originally he wanted to be an animator, creating lifelike characters on screen. But in grad school he worked with robots. “I went in a completely different direction,” he said.

Now he studies artificial intelligence, machine learning, human-robot interaction, robot vision and automatic motor skill acquisition. He has led several international and national projects as a principal investigator, including projects for NASA, the National Science Foundation, Intel, Daimler-Benz, Bosch, Toyota and Honda. Two years ago he received the NSF CAREER Award — the organization's most prestigious award in support of early-career faculty. Six assistant professors and one associate professor at ASU, all in robotics, have won the award.

“I think humans have this intrinsic motivation of actually creating stuff,” Ben Amor said. “And for a lot of us creating something that's similar to us, like another human being, is really kind of the hallmark of what we can achieve. … Certainly this drive to create something that's similar to us seems to be innate. It's not something that we came up with in the last couple hundred years.”

Video by Deanna Dent/ASU Now

Ben Amor speculates it’s the same impulse that led early man to paint the walls in caves.

“The Czech play is a milestone in a long arc of development,” he said, citing the early builders of automata. “So the marking of the word is not necessarily the beginning. It's kind of a sort of focusing, bringing all of these ideas, narrowing them down to these five letters. And I think that's what it's about. It kind of focused everything to these five letters, and then people took those five letters and created this plethora of many, many different ideas out of that again. So it kind of conceptualized it, made it a little bit clearer that there's a domain behind this.”

A few weeks ago, a video of Boston Dynamics robots dancing to The Contours’ “Do You Love Me?” captured viewers’ attention. It was impressive, showcasing robot dexterity and how physical systems are improving. Microprocessors and computing power have grown by leaps and bounds. The video has nearly 27 million views.

But impressive as they are, the Boston Dynamics robots are essentially sophisticated marionettes. Fundamentally they’re no different from Al-Jazari’s 12th-century waitress or Walt Disney’s Abe Lincoln. They can only do one thing.

Here’s what they can’t do: wash a sink full of dirty dishes, including fine china plates, crystal stemware and a lasagna pan caked in baked-on crud, without breaking most of it. They can’t go into any house and fix a water leak. They could deliver a package, but not walk up to the front door and let you know it arrived. And they can’t make any decisions on their own.

People and robots

“The field of robotics in general is still in its infancy, and human-robot interaction in particular is still at a very early stage,” said Wenlong Zhang, an assistant professor of systems engineering and director of the Robotics and Intelligent Systems Laboratory. Zhang and his team work on design and control of advanced robotic systems, including wearable assistive robots, soft robots and unmanned aerial vehicles. They also work on the problem of human-robot interaction.

Right now, most working robots sit in factories doing repetitive tasks all day long. They don’t have much intelligence. If you’ve ever seen an industrial robot at work, you know it’s nothing you’d want to get close to while it’s operating.

“For many of these conventional robots, you literally have to tell them the trajectory, and for many of the new applications that we're looking at,” Zhang said, “it's not possible. … Robots need to have not only high precision and power, but also need to be adaptive. They need to be inherently safe. They need to understand the intent of their users. So human-robot interaction is basically a very big umbrella that covers these topics.”

Machine intelligence is a broad field with a lot of people working on it. The ultimate goal is to make robots reliable partners. The crux of the problem is teaching machines things parents don’t explicitly teach children, things children learn on their own, like speaking or picking up objects.

“That's why many researchers, especially in psychology and machine learning, are trying to understand how infants learn and they are trying to apply these to robots,” Zhang said. “This is a challenging problem. … Robots now work with humans, and humans are inherently adaptive and stochastic. … Let's say if we have this conversation again, I probably am not going to say the exact same thing. … If you think about robots being your partner, they have to understand this.”

Computer science master’s degree student Michael Drolet gives the "hugging robot" a hug in the Interactive Robotics Lab on Oct. 23, 2020. Heni Ben Amor’s lab focuses on machine learning, human-robot interaction, grasping, manipulation and robot autonomy, with the hugging robot "learning" to interact with humans. Photo by Deanna Dent/ASU Now

How can I help you?

Ask Ben Amor how long it takes to program a robot.

“Six years.”

Six years?

“First you have to get the PhD,” he said.

Remember early personal computers? They were a bear to set up and operate. The early internet wasn’t much better. This video player didn’t work with that browser and vice versa. No one surfed the net. They kind of stumbled around in it. Today personal computers (and tablets and smartphones) are a breeze. You don’t have to know how they work.

But with robots and artificial intelligence today, you have to be a computer scientist. Sometimes even that’s not enough.

Siddharth Srivastava is an assistant professor and director of the Autonomous Agents and Intelligent Robots lab. Srivastava and his team research ways to compute the behavior of autonomous agents, going from theoretical formulations to executable systems. They use mathematical logic, probability theory, machine learning and notions of state and action abstractions.

“Undergrads who join our lab have to go through one or two years of training before they can actually change what a robot does,” Srivastava said. “In fact, we often have undergraduate students, or let's say first-year master's students who've already done their undergraduate program in computer science, and it takes them about a year after knowing all that to just start configuring the robot on their own for a new task. It's easy to hit replay and make it repeat whatever it has been programmed to do. But that's not the promise of AI, right? The promise of AI is that you can tell it what you want and it will do that stuff for you in a safe way. … To give that instruction, you actually need to have a lot of expertise.”

Srivastava tackles two lines of research in his lab.

The first is how to make autonomy more efficient. You have a household robot and you need to give it a new task. What kind of algorithms do you need to make it more adept at that?

In the second, you have an AI robot or an autonomous assistant. You don’t know much about it. (Srivastava expects this will be the vast majority of users.) “How do you interact with it?” he said. “How do you change what it's doing? How do you rectify it? How do you understand what it's doing?”

What he has found is that in both lines of research, common mathematical tools dealing with abstraction and hierarchies, and the way those tools are used, help solve these problems.

“So on the computation side, we are looking at how to use hierarchical abstractions to make it easier for AI systems to autonomously solve complex problems,” he said.

Frying an egg isn’t too tough for a robot to pull off, but a robot would run into serious trouble if you simply asked it to make you breakfast. Where does it start? What does it do first?

Subbarao Kambhampati has been researching robot task planning his entire career. He is a computer scientist with expertise in artificial intelligence, automated planning and information integration. For the past 10 years he has been working on how robots can work with humans.

When you book a flight online, the bot you use (which Kambhampati calls "a piece of code living on the sidewalk on the internet") will offer you a range of times and prices. What if it did more than that? What if it anticipated what you want and helped you, instead of just taking a request?

“So in general, my work is how to get these robots, our bots, to be our partners, rather than just our tools,” he said.

Teaching robots to teach themselves, via many simulations, is streamlining task planning. Children do that by picking up everything they see around them. Eventually they figure out: This is heavy, that is smooth, these break. No one specifically teaches them these things.

The Boston Dynamics robots didn’t learn to jump by jumping a million times on their own. That kind of self-taught, trial-and-error learning is what Kambhampati is talking about.

“Much of what changed in AI in general in the last 10 years is these tacit knowledge tasks that we didn't know how to tell robots, because basically anything that we do would only be an approximation, and it's much better somehow to get the robot to learn by itself by just trial and error, and [that is true of] so much of manipulation right now, like grasping,” Kambhampati said. “So this is actually an interesting change in the research. Much more robotics work now is essentially focused on learning techniques and object manipulation.”

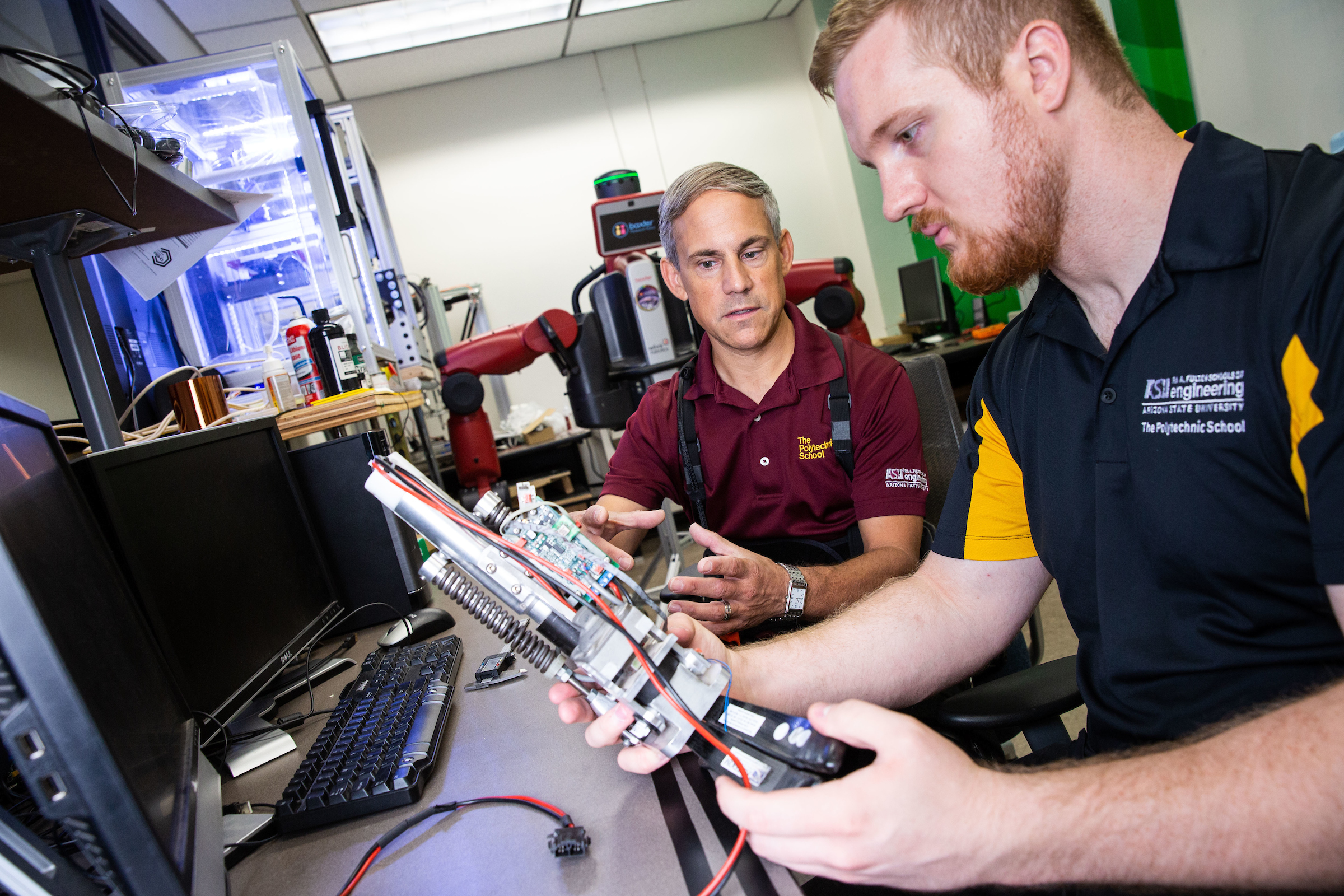

Professor Tom Sugar works with doctoral student Jason Olson and shows how the "Sparky" robotic ankle works on July 20, 2018. Sugar and his students work on robotics that enhance human movements and work as tools to help rehabilitation for those who have suffered stroke or injury. Photo by Deanna Dent/ASU Now

Enhancing people with robotics

Tom Sugar is a roboticist and director of the Human Machine Integration Lab, which works on robotic orthoses, prostheses and wearable robots for enhanced mobility. To answer your question, Sugar says no, there will never be an Iron Man suit; it would be too heavy and too expensive.

But Sugar expects the next 10 years to see a boom in human augmentation, with exoskeletons assisting warehouse workers.

“In my realm, we're trying to build robotic devices to assist people,” Sugar said. “In logistics, if you have to lift, or if you have to push your arms above your head, and you've got shoulder injury or neck injury or back injury, we're trying to build devices to make the work easier and less fatiguing.”

Sugar sees robots as tools. Horses let people travel farther and faster, and then cars replaced them. Robotics is going to make life easier by assisting people with artificial intelligence. Picture an airplane mechanic working on an engine while wearing something like Google Glass.

“It'll pop up information and tell you, ‘Hey, you know, if this is wrong, why don't you check these five things?’” Sugar said. “And then the human being can make an assessment on those things.”

Artificial intelligence can beat people at chess, but pair a human with AI and the team will do better than either one alone.

“Plus the human being can make smarter and better choices,” Sugar said. “And I think that's the positive outcome that we're going to see in the future.”

Looking ahead

What will the future of robots be like? Something that’s universally accessible to everyone, or something tailored to specific people or classes of users?

“I think what we might see is more task-specific rather than person-specific, because people have a huge variance in their limits, capabilities, preferences and things like that,” Srivastava said. “But there are fewer tasks where it's easy to conform a robot to a task. For instance, robot vacuum cleaners. They are not personalized, but they are specific to the task of vacuuming.”

The AI community has intuitively been working to make AI systems safe, efficient and easy to use. The missing element, Srivastava said, has been the operator class: the people a system is meant to be used by. What needs to be designed, he said, are systems that are easy to learn how to use.

“Who is it easy to use for?” he asked. “You could perfectly reasonably say that an AI system is used in a very narrow situation. So the operators who are qualified to use it are only people with this level of certification. That's fine in some cases, but I think what we need to do is design systems that are not just easy to use, but easy to learn how to use. … What this would allow you to do is, if you have an AI system and an inexperienced user, then the AI system would be able to train the user on the fly in a way that it keeps the operations safe. So I think that is a good objective for us as we go forward.”


Top photo: The Kuka robotic arm goes through its paces at the Innovation Hub on the Polytechnic campus on May 17, 2018. Photo by Charlie Leight/ASU Now
