ASU engineers working on 'talking' cars

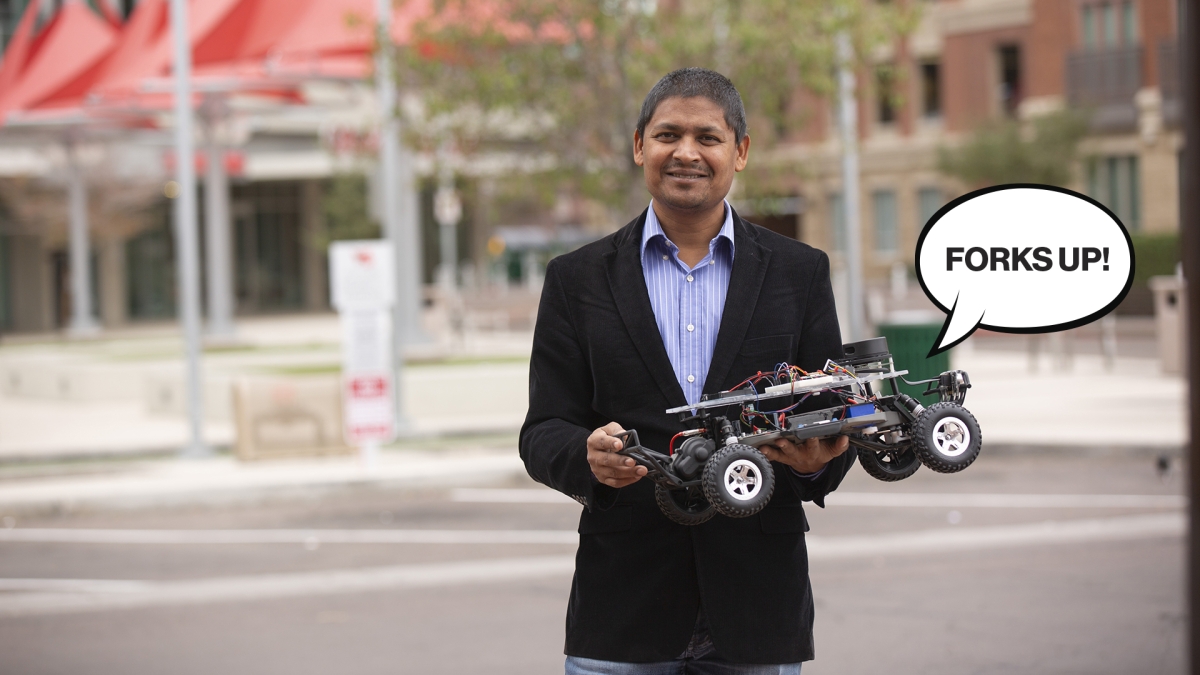

Aviral Shrivastava, a professor of computer science and engineering in the Ira A. Fulton Schools of Engineering at Arizona State University, poses with a one-tenth-scale autonomous vehicle model. Shrivastava has developed a proposal to allow self-driving cars to securely share the data they receive while driving with each other to make roads safer. Photo illustration by Erika Gronek/ASU

Lightning McQueen saves Radiator Springs. KITT from Knight Rider whooshes up the road with David Hasselhoff in the driver’s seat. Optimus Prime and his crew of Transformers keep on trucking.

The idea of a talking car — a friend who literally picks you up — looms large in the popular imagination.

Now, Aviral Shrivastava, a professor of computer science and engineering in the Ira A. Fulton Schools of Engineering at Arizona State University, has come up with a plan to help cars communicate — but there’s a catch.

The cars will only talk to each other.

Shrivastava, a faculty member in the School of Computing and Augmented Intelligence, part of the Fulton Schools, and his team of researchers in ASU’s Make Programming Simple Lab have been studying how to use state-of-the-art programming techniques to help autonomous vehicles, also known as self-driving cars, collect data and share the information they gather.

The team, which includes computer science doctoral student Edward Andert and computer science graduate student Francis Mendoza, began with a couple of simple questions: What if your car could see every other car on the road? And what if it could use the information it gathers about driving conditions to help other cars make better safety decisions?

“When cars drive, they need to find paths that are clear and free from obstructions,” Shrivastava says. “If there is a car driving in a lane next to you, you can only see that car. But the driver of the other car can see things you cannot. When autonomous cars share data, they can solve basic safety problems.”

Most cars on the road today can transmit some forms of data. Automotive navigation systems are increasingly common, and car manufacturers have created products like OnStar from General Motors that can access and send information such as vehicle speed, seat belt use and crash data to first responders in case of an emergency.

Plus, engineers are helping autonomous vehicles learn. Yezhou “YZ” Yang, a Fulton Schools associate professor of computer science and engineering, is at the forefront of the development of artificial intelligence systems for self-driving cars. His work in active perception involves the development of algorithms that collect and analyze complex datasets from vehicle sensors to help the cars make safer and more intelligent decisions while on the road.

Talk to the fender

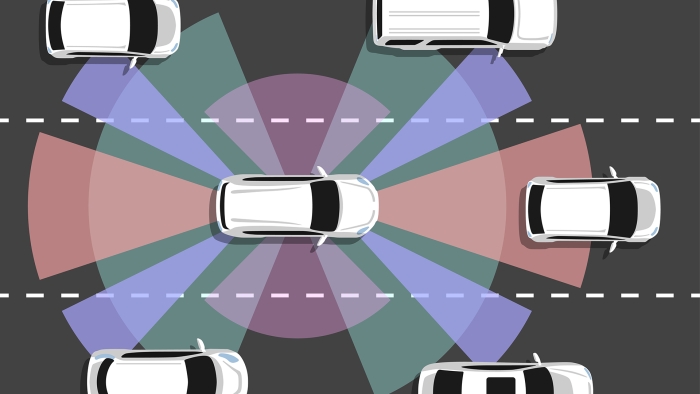

Shrivastava’s work would build on those important, existing systems. An autonomous vehicle collects critical data through its various sensors and cameras and rapidly processes the information it gathers while driving. One car can then tell another what it detects. This is called cooperative perception.

“Cooperative perception allows autonomous vehicles to collectively gather and process data, leading to more efficient use of resources and optimization of driving behaviors such as lane changing, merging and route planning,” Yang says. “It also helps to avoid near-miss situations.”

But a significant new component of Shrivastava’s work is its focus on security. His team is proposing a system they have named CONClave.

If cars are going to communicate, it’s critical that the information they share is correct.

Historically, some makers of autonomous vehicles have kept them offline while driving to prevent them from being hacked. It’s a concern that is becoming more serious as cybercrime rises and hackers target automotive computers. CONClave seeks to address these problems using authentication and prevention.

First, a transmission from another car is authenticated using a security protocol, similar to a secret key, that is approved by the vehicle’s manufacturer and by governmental agencies.
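The article does not describe CONClave's actual protocol, so the following is only a minimal sketch of the general idea of authenticating a message with a secret key. It assumes a hypothetical key shared between vehicles and uses an HMAC tag from Python's standard library; the names `SHARED_KEY`, `sign_message` and `is_authentic`, and the message fields, are illustrative and not taken from the research.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. The article does not say how keys are issued
# or distributed, so this value is purely illustrative.
SHARED_KEY = b"example-key-issued-by-a-trusted-authority"


def sign_message(payload: dict, key: bytes = SHARED_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def is_authentic(payload: dict, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag and compare it to the received one in constant time."""
    return hmac.compare_digest(sign_message(payload, key), tag)


# The sending car attaches a tag to its report (field names are made up).
report = {"sender": "car-17", "obstacle_at_m": 42.5}
tag = sign_message(report)

# The receiving car accepts the report only if the tag checks out.
tampered = dict(report, obstacle_at_m=5.0)
print(is_authentic(report, tag))
print(is_authentic(tampered, tag))
```

In this sketch, a report that was altered in transit, or signed with a different key, fails the check and would be discarded before it reaches the car's decision-making.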

Next, Shrivastava’s system protects against Byzantine attacks. In computer science, a Byzantine fault occurs when one part of a system sends faulty or conflicting information to the others, so the parts cannot agree on what is true. The name comes from the Byzantine generals problem, a classic thought experiment that invites a thinker to contemplate how an army might operate if some of its generals were loyal and some were traitors. It inspires software engineers to find ways to deal with conflicting pieces of data.

To guard against a scenario in which a malicious signal is transmitted from one vehicle to another, an autonomous vehicle using CONClave will compare the data it receives from other cars with the feedback it is getting from its own cameras and sensors.

Finally, the CONClave software uses a scoring system to rapidly determine which information is the most trustworthy, much in the way that human beings would quickly compare their own observations to instructions being given by others. The result is that self-driving vehicles can share information and authenticate what they are receiving.
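The comparison and scoring steps can be illustrated with a small sketch. This is not CONClave's algorithm, which the article does not detail. It assumes each car reports a single distance to an obstacle, and it uses a made-up scoring rule that blends a sender's previous trust with how closely its latest report matches the receiving car's own sensors. The `Report` class, the function names, the 2-meter tolerance and the 0.5 blending rate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Report:
    sender: str
    obstacle_distance_m: float  # distance to an obstacle, as claimed by the sender


def agreement(own_m: float, reported_m: float, tolerance_m: float = 2.0) -> float:
    """Score in [0, 1]: 1.0 for a perfect match, 0.0 at or beyond the tolerance."""
    error = abs(own_m - reported_m)
    return max(0.0, 1.0 - error / tolerance_m)


def update_trust(trust: dict, report: Report, own_m: float, rate: float = 0.5) -> float:
    """Blend the sender's previous trust with how well this report matches our sensors."""
    previous = trust.get(report.sender, 0.5)  # unknown senders start at a neutral 0.5
    match = agreement(own_m, report.obstacle_distance_m)
    trust[report.sender] = (1.0 - rate) * previous + rate * match
    return trust[report.sender]


def most_trusted(trust: dict) -> str:
    """Return the sender with the highest trust score."""
    return max(trust, key=trust.get)


# Our own sensors place the obstacle 40.5 m ahead.
trust = {}
update_trust(trust, Report("car-a", 40.0), own_m=40.5)  # close to our reading
update_trust(trust, Report("car-b", 10.0), own_m=40.5)  # far from our reading
print(trust)
print(most_trusted(trust))
```

Here the car whose report roughly matches the local sensor reading ends up with a higher score than the car whose report contradicts it, which mirrors the idea of weighing others' claims against one's own observations.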

The cars can talk — but they can’t gossip.

The work has several important implications. First, CONClave can serve as a backup if an autonomous vehicle’s sensors malfunction or fail. During tests, it was also highly effective at detecting both malicious errors and software errors.

While the research focuses initially on self-driving cars, Shrivastava sees the potential to improve safety systems in cars with human drivers.

“It’s possible that some of these systems could be adapted to assist in-car computers in conventional vehicles, perhaps working with traffic light sensors or signal devices placed on street light fixtures,” he says.

Driving into the future

Shrivastava plans to take his ideas on the road.

His CONClave research paper has been accepted for presentation in June at the Design Automation Conference, or DAC, in San Francisco. The conference will be a critical chance to network with approximately 6,000 experts on trends and technologies in the electronics industry.

Shrivastava’s team will get to interface with attendees from more than 1,000 organizations, including senior executives from enterprise sectors and faculty from other universities.

“Conference opportunities such as this are important for researchers,” says Ross Maciejewski, director of the School of Computing and Augmented Intelligence. “Shrivastava’s efforts at DAC will provide great exposure for his ideas and create an opportunity to receive support from other experts.”

The researchers aim to soon have real cars communicating the way Shrivastava has designed, making streets safer.

For now, talking vehicles like the iconic Transformer Bumblebee will continue to drive only through our dreams.
