Professor argues for risk-innovation framework in responsible AI advancement

While the proliferation of artificial intelligence may feel like an isolated phenomenon, humans have confronted disruptive technological change before, and have done so successfully for thousands of years. In those earlier cases, however, innovation always moved faster than its societal consequences, which is not a given with AI.

“What happens when you get to the point where the timescale under which consequences emerge is much smaller than the timescale it takes to innovate our way out of them?” Andrew Maynard asked the audience at a recent seminar hosted by Arizona State University’s Consortium for Science, Policy & Outcomes.

The seminar, “Responsible Artificial Intelligence: Policy Pathways to a Positive AI Future,” was delivered at ASU’s Barrett & O’Connor Washington Center to an in-person and live-streamed audience. In it, Maynard, a professor in ASU’s School for the Future of Innovation in Society and director of the Risk Innovation Lab, argued for the need for a new risk-management and innovation framework in response to accelerating developments in transformational AI.

Maynard, who writes regularly on AI and has testified before Congress about its ramifications, pointed to the rapid iteration of AI. Calling ChatGPT “the tip of a very large AI iceberg,” he noted that regulators internationally are looking at far larger, trainable “foundation models” that are applicable in many areas, as well as “frontier models,” which are foundation models with potentially disruptive powers.

Maynard touted the framework of an “advanced technology transition,” a transition that differs from those spurred by past innovations but remains solvable. Drawing on his own research, he pointed to nanotechnology as a field characterized by broad engagement across groups such as academics, technologists and philosophers, and by a framework for responsible innovation founded on anticipation, reflexivity, inclusion and responsiveness.

This framework-based approach differs, he noted, from how AI has developed to date, with computer scientists playing an outsized role without an adequate grasp of the social consequences of their actions. It also differs from policymakers’ recent interest in “responsible AI,” which is being defined differently from responsible innovation.

“We need a framework for thinking and guiding decisions which recognizes that complexity,” Maynard said.

Working from a risk-innovation lens, which focuses on threats to existing and future values (items and beliefs of importance), Maynard identified a series of “orphan risks” that go unaddressed because of their complexity: social and ethical factors; unintended consequences of emerging technologies; and organizational and systemic issues.

In addition, Maynard emphasized the need for “agile regulation” that functions at the speed of sectoral innovation and incorporates learnings from continuously evolving technologies.

“We cannot stop the emergence of transformative AI,” Maynard said. “All we can do is steer it. … (W)e have to think about how we steer the inevitable towards outcomes that are desirable.”

To watch the full seminar, visit the Consortium for Science, Policy & Outcomes’ website.

More Science and technology

When facts aren’t enough

In the age of viral headlines and endless scrolling, misinformation travels faster than the truth. Even careful readers can be swayed by stories that sound factual but twist logic in subtle ways that…

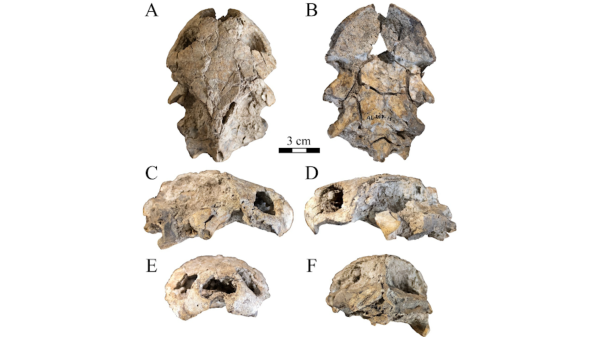

Scientists discover new turtle that lived alongside 'Lucy' species

Shell pieces and a rare skull of a 3-million-year-old freshwater turtle are providing scientists at Arizona State University with new insight into what the environment was like when Australopithecus…

ASU named one of the world’s top universities for interdisciplinary science

Arizona State University has an ambitious goal: to become the world’s leading global center for interdisciplinary research, discovery and development by 2030.This week, the university moved…