When we think about robots, it’s usually in the context of their relationships with humans. Some are friendly, like Rosie the Robot from “The Jetsons,” a bizarre juxtaposition of futuristic technology and 1950s gender roles. Then there’s R2-D2, whose impressive skills are punctuated with adorable beeps and chirps.

Some robots are destructive — like the Terminator, programmed to go back in time to kill. Or the Cylons from “Battlestar Galactica,” cybernetic beings that try to wipe out the entire human race.

It makes sense to consider robots in relation to humans, because robots are created by people, for people. Yet outside of movies and books, it’s easy to get caught up in advancing the technological prowess of robots without thinking about the human element. We need them to work with us, not against us, or even simply apart from us.

“Often when you start thinking about these technologies, the human kind of gets lost in the shuffle,” said Nancy Cooke, a professor of human systems engineering at Arizona State University’s Polytechnic School. “People need to be able to coexist with this technology.”

Helping people and technology collaborate well is no easy feat. That’s why researchers across ASU are teaming up to help people, robots and artificial intelligence work together seamlessly.

“One of the first things you worry about is team composition. I think it’s an important question to consider who’s doing what on the team,” Cooke said. “We need to make sure the robots are doing what they’re best suited for and the humans are doing what they’re best suited for. You have (robots) doing tasks that either the human doesn’t want to do, it’s too dangerous for the human to do, or that the AI or robot is more capable of doing.”

Cooke is a cognitive psychologist by training. She has spent years working to understand human teamwork and decision-making. Now she applies this expertise to human-technology teams as director of ASU’s Center for Human, Artificial Intelligence, and Robot Teaming (CHART), a unit of the Global Security Initiative.

CHART is providing much-needed research on coordinating teams of humans and synthetic agents. Its work involves everything from how these teams communicate verbally and nonverbally, to how to coordinate swarms of robots, to the legal and ethical implications of increasingly autonomous technology. To accomplish this, robotics engineers and computer scientists work closely with researchers from social sciences, law and even the arts.

What can human-robot teams do? There are lots of applications. Scientists can explore other planets through rovers that don’t need air and water to survive. Swarms of drones could carry out search-and-rescue missions in dangerous locations. Robots and artificial intelligence (AI) can assist human employees in automated warehouses, on construction sites or even in surgical suites.

Teaching teamwork

“One of the key aspects of being on a team is interacting with team members, and a lot of that on human teams happens by communicating in natural language, which is a bit of a sticking point for AI and robots,” Cooke said.

She is working on a study called the “synthetic teammate project,” in which AI (the synthetic teammate) works with two people to fly an unmanned aerial vehicle. The AI is the pilot, while the people serve as a sensor operator and navigator.

The AI, developed by the Air Force Research Laboratory, communicates with the people via text chat.

“So far, the agent is doing better than I ever thought it would,” Cooke said. “The team can function pretty well with the agent as long as nothing goes wrong. As soon as things get tough or the team has to be a little adaptive, things start falling apart, because the agent isn’t a very good team member.”

Why not? For one thing, the AI doesn’t anticipate its teammates’ needs the way humans do. As a result, it doesn’t provide critical information until asked — it doesn’t give a “heads up.”

“The whole team kind of falls apart,” Cooke said. “The humans say, ‘OK, you aren’t going to give me any information proactively, I’m not going to give you any either.’ It’s everybody for themselves.”

A need for information is not the only thing people figure out intuitively. Imagine that you are encountering a new person. The person approaches you, looks you in the eye and reaches out his right hand.

Your brain interprets these actions as “handshake,” and you reach out your own arm in response. You do this without even thinking about it, but could you teach a robot to do it?

Heni Ben Amor, an assistant professor in the School of Computing, Informatics, and Decision Systems Engineering, is trying to do just that. He teaches robots how to interact with people physically by using machine learning. Machine learning is how computers and AI learn from data without being explicitly programmed.

“It’s really about understanding the other and their needs, maybe even before they are uttering them. For example, if my coworker needs a screwdriver, then I would probably pre-emptively pick one up and hand it over, especially if I see that it’s out of their range,” Ben Amor said.

His team uses motion-capture cameras, like the one in an Xbox Kinect. It’s an inexpensive way to let the robot “see” the world. The robot observes two humans interacting with each other, playing table tennis or assembling a set of shelves, for instance. From this, it learns how to take on the role of one of the people — returning a serve or handing a screw to its partner.

“The interesting part is that no programming was involved in this,” Ben Amor noted. “All of it came from the data. If we wanted the robot to do something else, the only thing we need to do is go in there again and demonstrate something else. Instead of getting a PhD in robotics and learning programming, you can just show the movement and teach the robot.”

Designing droids

Lance Gharavi also works with the physical interactions between humans and robots, but he is not a roboticist. He is an experimental artist and professor in the School of Film, Dance and Theatre. He has been integrating live performance and digital technologies since the early '90s.

Several years ago, Gharavi was asked to create a performance with a robot in collaboration with Srikanth Saripalli, a former professor in ASU’s School of Earth and Space Exploration.

“I think one of my first questions was, ‘Can he juggle?’ And Sri said, ‘No.’ And I said, ‘Can he fail to juggle?’ And Sri said, ‘Yes, spectacularly!’ I said, ‘I can work with that,’” Gharavi said.

Gharavi’s team put together a piece called “YOU n.0,” which premiered at ASU’s Emerge event. Afterward, Saripalli told Gharavi that the collaboration had advanced his research and asked to continue the partnership.

“It was primarily about trying to understand the control structures and mechanisms for the Baxter robot platform,” explained Gharavi, referring to an industrial robot with an animated face created by Rethink Robotics. “They weren’t really sure how to work with it. My team created a means of interfacing with the robot so that we could operate it in a kind of improvisational environment and make it function.”

For example, the team created a system called “the mirror,” in which the robot would face a person and mirror that person’s movements.

“What we were interested in doing is creating a system where the robot could move like you, but not just mimicking you. So that you could potentially use the robot as, say, a dance partner,” Gharavi said.

His goal is to give robots some of the “movement grammar” that people have. Or to put it more simply, to make a robot move less robotically.

“If we’re looking forward to a day when robots are ubiquitous, and interacting with robots is a common experience for us, what do we want those interactions to look like? That takes a degree of design,” he explained.

Such a future may also require people to adjust their perceptions and attitudes. Cooke’s team conducted an experiment in which they replaced the AI pilot with a person but told the other two teammates that they were working with a synthetic agent. She says they treated the pilot differently, giving more commands with less polite interaction.

“Everything we saw from how they were interacting indicated that they weren’t really ready to be on a team with a synthetic agent. They still wanted to control the computer, not work with it,” Cooke said.

In a twist on the experiment, the researchers inserted a human teamwork expert into the AI pilot role. This person subtly guided the other humans on the team, including asking for information if it wasn’t coming in at the right time. The collaboration was much more effective.

Cooke’s team continues to work with the Air Force Research Laboratory to improve the synthetic teammate and test it in increasingly difficult conditions. They want to explore everything from how a breakdown in comprehension affects team trust to what happens if the AI teammate gets hacked.

Assisting autonomy

Cooke is also collaborating with Spring Berman, associate director of CHART, on a small-scale autonomous vehicle test bed. As driverless-car technology advances, these vehicles will have to safely share the road with each other, human-driven cars, pedestrians and cyclists.

Some of the miniature robotic “cars” in the test bed will be remotely controlled by people, who will have a first-person view of what’s happening as if they are really driving a car. Other vehicles will operate autonomously. The team wants to explore a wide range of potential real-world scenarios.

“Autonomous vehicles will generally obey all the rules. Humans are a little more flexible. There’s also the issue of how do humans react to something that’s autonomous versus human-driven. How much do they trust the autonomous vehicle, and how does that vary across ages?” Berman asked.

She is an associate professor in the School for Engineering of Matter, Transport and Energy who primarily studies robot swarms. She says that CHART allows her to consider her technical work in a broader context.

“You’re so focused on proving that the swarm is going to do something that you design it to do. In reality, the robots will be operating in an uncertain environment that’s changing. There are things you can’t control, and that’s very different from a simulation or lab environment. That’s been eye-opening for me, because you’re so used to testing robot swarms in controlled scenarios. And then you think, ‘Well, this might actually be operating out in the world. How will we keep people safe? How are they going to benefit from this? What are the legal issues?’” Berman said.

According to Ben Amor, robots still have a long way to go before they operate truly autonomously in a safe way. Currently, machine learning is mostly used in domains where making a mistake is not costly or dangerous. An example is a website that recommends products to buy based on your past choices.

Ben Amor notes that robots aren’t good at executing a series of interdependent actions yet. For instance, if a car turns left, it is now on a new street. Any decision it makes now will be dependent on that last decision.

“If you’re learning, that means you have to explore new things. We need to allow the robot to try things it’s never done before. But if you do that, then you also run the risk of doing things that adversely affect the human,” he said. “That’s probably the biggest problem we have right now in robotics.”

In his research, he is exploring how to ensure that this continual learning process is actually safe before conducting those kinds of experiments.

What it means to be human

If the idea of robots continually learning and acting autonomously is a bit unnerving to you, you are not alone. That is why, as ASU researchers work on perfecting the technology of robots and AI, they collaborate with humanities scholars and artists like Gharavi.

“We can’t go blithely forward, just making and creating, without giving some thought to the implications and ramifications of what we’re doing,” Gharavi said. “That’s part of what humanities people do, is think about these sorts of things.”

Gharavi is collaborating with Cooke and Berman on the autonomous vehicle test bed. His role is to create the environment that the cars will operate in, a city for robots that he calls “Robotopolis.”

“What I and some designers I’m working with are creating will be something on the order of a large art installation, that will at once be a laboratory for research and experiments in robotics and also an artistic experience for people that come into the space — an occasion for meditation on technology and the future, and also history,” he explained.

Gharavi says what fascinates him about working with robotics and AI is the way it forces us to explore what it really means to be human.

“We understand robots as kind of the ultimate ‘other.’ Whatever they are, they are the opposite of human. They are objects that specifically lack those qualities that make us as humans special. At least that’s the way we have framed them,” he said.

“Through that juxtaposition, it’s a way of engaging with what it means to be alive, what it means to be sentient, what it means to be human. What it means to be human has always been up for debate and negotiation. Significant portions of the population of this country were once not considered humans. So the meaning of human has changed historically,” he continued.

Scholars in the arts, humanities and social sciences have a lot to offer scientists and engineers studying robotics. Cooke says ASU’s interdisciplinary approach to robotics and AI sets the university apart from others. It also brings its own set of challenges, just like human-robot collaboration.

“Again you have the communication problem. The language that people who do robotics speak is not necessarily the same kind of language that we speak when we’re talking about human interaction with the robots,” noted Cooke, who chaired a committee on the effectiveness of team science for a National Academies study.

“Multidisciplinary team science can be really challenging, but it also can be really rewarding,” she said about her work with CHART. “Now I feel like I’m living the dream.”

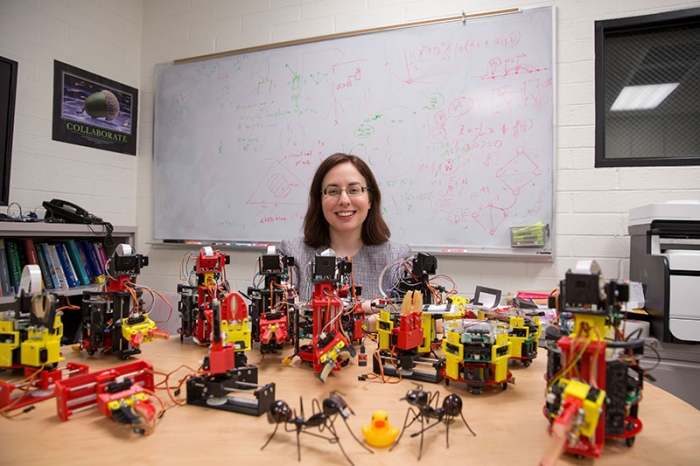

Written by Diane Boudreau. Top photo: A child interacts with a Baxter robot at ASU's Emerge festival. Photo by Tim Trumble.

The Polytechnic School; the School of Computing, Informatics, and Decision Systems Engineering; and the School for Engineering of Matter, Transport and Energy are among the six schools in the Ira A. Fulton Schools of Engineering. The School of Film, Dance and Theatre is a unit of the Herberger Institute for Design and the Arts. Human-AI-robot teaming projects at ASU are funded by the National Science Foundation, NASA, Office of Naval Research, Defense Advanced Research Projects Agency, Intel, Honda, Toyota and SRP.