ASU team unleashes AI-powered robodog to help humanity

Quinn the dalmatian, a staff member's pet, pays a quick visit to the Laboratory for Learning Evaluation and Naturalization of Systems, or LENS Lab, where she eyes the Unitree Go2 robotic dog uneasily. But Quinn can relax. Researchers in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at Arizona State University, are busy creating new artificial intelligence, or AI, systems that will allow robotic dogs to tackle the most dangerous search-and-rescue missions. All dogs in the LENS Lab have circuits and gears. No real animals are used in the research. Photo by Erika Gronek/ASU

While the average pampered pup at home may lounge on the couch and demand belly rubs, the robotic dogs being created at Arizona State University are stepping up to take on some of the world’s most dangerous tasks.

Meet the Unitree Go2. This agile, quadrupedal robot is designed for more than simply fetching sticks. It’s equipped with advanced artificial intelligence, cameras, LiDAR and a voice interface, and it’s learning how to assist with everything from search-and-rescue missions to guiding the visually impaired through complex environments.

Ransalu Senanayake, an assistant professor of computer science and engineering in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at ASU, is leading the research team out to prove that some heroes have both circuits and tails.

LENS Lab unleashes the future of robotics

Senanayake, a roboticist, founded the Laboratory for Learning Evaluation and Naturalization of Systems, or LENS Lab. His vision is to enhance human well-being by developing intelligent robots that can assist in real-world scenarios.

“We’re not just writing code for robots,” he says. “We’re creating tools to solve problems that matter, like saving lives in dangerous environments and making the world more accessible.”

The LENS Lab team is pushing the boundaries of robotics by incorporating AI models that enable the robot to understand and adapt to its environment, making it ready for real-world applications.

“AI breakthroughs are finally turning robots into a feasible, ubiquitous reality,” Senanayake says. “We are teaching our robots to see, hear and move in complex environments, making them useful in a variety of situations.”

Sit, stay, innovate

The team is working on multiple projects using the Unitree Go2 robotic dog. One of the lab’s most exciting projects comes from Eren Sadıkoğlu, a student pursuing a master’s degree in robotics and autonomous systems. He is developing vision- and language-guided navigation tools to help the robotic dog perform search-and-rescue missions.

Sadıkoğlu, who says he has been passionate about robotics since middle school, is using reinforcement learning to teach the robot how to move in challenging environments, such as those left behind by an earthquake or other natural disaster.

“The robots need to jump over obstacles, duck under things and do some acrobatic movements,” Sadıkoğlu says. “It’s not just about moving from point A to point B. It’s about moving safely and strategically through difficult terrain.”

Thanks to its advanced sensors, such as RGB-depth cameras and touch sensors on its feet, the robot can adapt to and navigate the unpredictable conditions within disaster zones.

“This research is about making robots that can go where humans can’t, keeping rescue teams safe while saving lives,” Sadıkoğlu says.

4 paws for a good cause

Riana Chatterjee, an undergraduate student in the Fulton Schools computer science program, is also contributing to the future of robotics with a project aimed at assisting the visually impaired. Chatterjee is developing AI-powered algorithms so the robot dog can guide individuals safely through both indoor and outdoor spaces.

“My project is about combining deep learning technologies to enable the robot to understand its surroundings and communicate that to a visually impaired person,” Chatterjee says.

Chatterjee’s work blends emerging AI technologies. She is using You Only Look Once, or YOLO, a computer vision model that enables the robot to quickly recognize and classify objects in its environment, distinguishing between a person, a wall or an obstacle. She is also tapping into transformer-based monocular depth estimation, which lets the robot gauge how far away objects are from a single camera image and navigate safely.

Finally, the project employs vision language models, or VLMs, so the robot can interpret what it sees and respond using language to communicate with the visually impaired person by describing surroundings or offering guidance.
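To make the idea concrete, here is a minimal sketch of how the output of an object detector and a depth estimator might be combined into spoken-style guidance. It is an illustration only, not the LENS Lab's code: the `Detection` class, the function names, the 2-meter warning distance and the tiny hand-built depth map are all assumptions, and no real YOLO, depth or VLM model is called.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Tuple


@dataclass
class Detection:
    """Hypothetical stand-in for one result from an object detector."""
    label: str                       # class name, e.g. "person"
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max), max exclusive


def box_distance(depth_map: List[List[float]], box: Tuple[int, int, int, int]) -> float:
    """Median depth in meters of the pixels inside the bounding box."""
    x0, y0, x1, y1 = box
    values = [depth_map[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return median(values)


def bearing(box: Tuple[int, int, int, int], image_width: int) -> str:
    """Coarse direction based on where the box center falls horizontally."""
    center_x = (box[0] + box[2]) / 2
    if center_x < image_width / 3:
        return "to your left"
    if center_x > 2 * image_width / 3:
        return "to your right"
    return "ahead"


def describe(detections: List[Detection],
             depth_map: List[List[float]],
             warn_within_m: float = 2.0) -> List[str]:
    """Return one message per nearby object, closest first."""
    width = len(depth_map[0])
    nearby = []
    for det in detections:
        distance = box_distance(depth_map, det.box)
        if distance <= warn_within_m:  # assumed threshold, not from the article
            nearby.append((distance, det))
    nearby.sort(key=lambda pair: pair[0])
    return [
        f"{det.label} {bearing(det.box, width)}, about {distance:.1f} meters"
        for distance, det in nearby
    ]


if __name__ == "__main__":
    # Toy 4x6 depth map: everything 5 m away except the two left columns.
    depth = [[1.2, 1.2, 5.0, 5.0, 5.0, 5.0] for _ in range(4)]
    seen = [Detection("person", (0, 0, 2, 4)), Detection("wall", (2, 0, 6, 4))]
    for message in describe(seen, depth):
        print(message)
```

In a real system, the detections and the depth map would come from trained models, and a vision language model could turn the same information into fuller, more natural descriptions.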

Chatterjee’s work could have a huge impact. Future robots could act as guides, identifying obstacles and walkable areas and serving as service dogs in environments where real animals are impractical.

“For me, robotics is about making life easier for people with impairments. It’s fascinating to think about how AI can change the lives of those who need it the most,” she says.

Unleashing the future of robotics

Senanayake believes that robotics and AI have the potential to transform our world.

He envisions robots being a regular part of our daily lives, helping around the house, assisting in critical missions and opening up new possibilities for accessibility.

“The future of robotics is exciting, and I hope that with our research, we’ll be able to bring these technologies into the homes and communities that need them most,” he says.

As AI and robotics continue to evolve, Ross Maciejewski, director of the School of Computing and Augmented Intelligence, emphasizes the school’s commitment to fostering innovation and providing students with a rigorous, project-driven curriculum that prepares them for the rapidly changing high-tech landscape.

“Our goal is to equip students with both the theoretical foundations and practical skills needed to tackle the challenges of tomorrow,” Maciejewski says. “By blending AI with robotics, we are not only expanding the capabilities of technology but also creating future leaders who will bring transformative solutions to critical issues.”

Who knows? Your next helpful pooch may just be a robot.

More Science and technology

New research by ASU paleoanthropologists: 2 ancient human ancestors were neighbors

In 2009, scientists found eight bones from the foot of an ancient human ancestor within layers of million-year-old sediment in the Afar Rift in Ethiopia. The team, led by Arizona State University…

When facts aren’t enough

In the age of viral headlines and endless scrolling, misinformation travels faster than the truth. Even careful readers can be swayed by stories that sound factual but twist logic in subtle ways that…

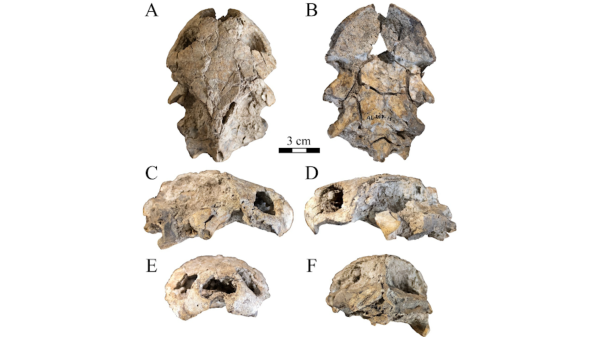

Scientists discover new turtle that lived alongside 'Lucy' species

Shell pieces and a rare skull of a 3-million-year-old freshwater turtle are providing scientists at Arizona State University with new insight into what the environment was like when Australopithecus…