A rocket launches into space. It escapes the Earth’s atmosphere and falls away from the payload. The nose cone peels away. The main payload, a 15,000-pound satellite, deploys.

In the space between the satellite and the rocket, a big ring holds smaller payloads. They’re components for something larger — a solar array or a radio antenna perhaps. One by one they’re ejected off the ring into space. Sometime later, another rocket delivers a set of cubesats — spacecraft the size of a large shoebox.

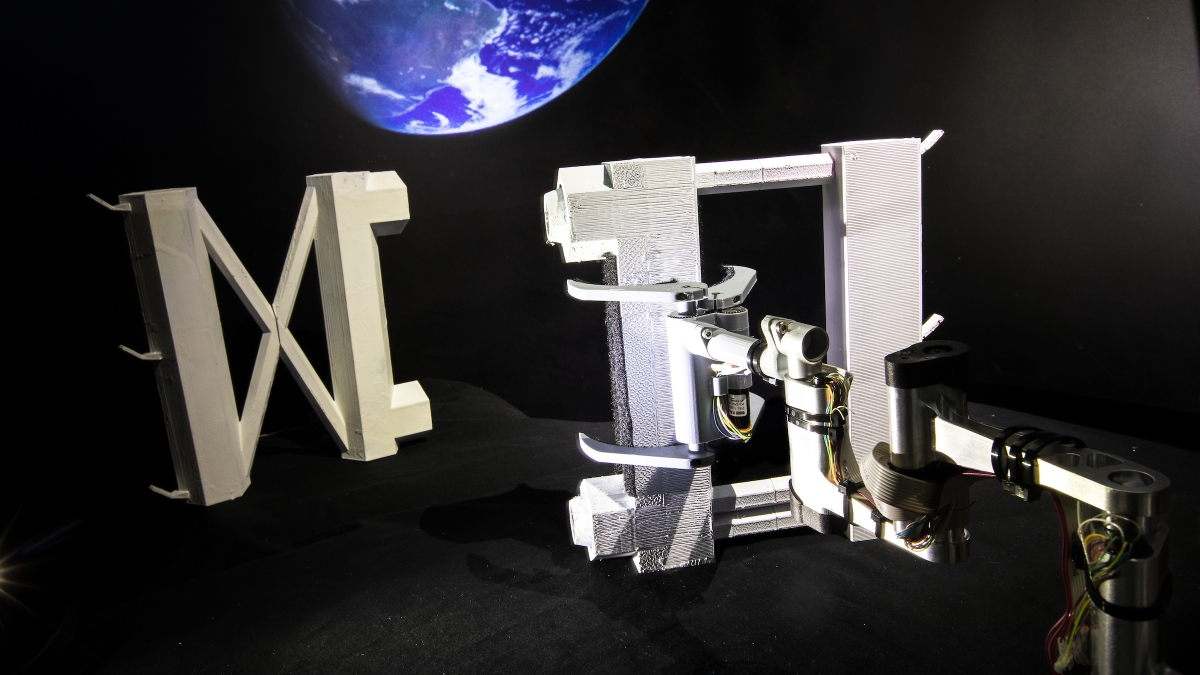

These cubesats have small arms, about the length of a 6-year-old child’s. They orient themselves in space, spot the floating components and begin assembling them.

The arm was created at NASA’s Jet Propulsion Laboratory, and the software that lets it locate and reach for components is being developed in a joint project between JPL and Arizona State University.

The cubesats have global positioning systems, star trackers (optical devices that determine position and attitude by measuring the positions of stars) and cameras to locate the components they will assemble.

The cameras will compute the position and three-dimensional orientation of the components floating nearby.

“Once the robot has that information, it knows how to move its arm to pick up the part at the right place,” said Renaud Detry, a robotics technologist with JPL’s computer vision group. “For us, it seems so natural and intuitive. When we see something, we just reach out for it. We don’t even realize the complexity of the problem our brain is solving. When you try to implement that behavior on a robot, it’s extremely complicated.”
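To make the idea concrete, here is a minimal sketch of the geometry behind "pick up the part at the right place." It assumes the vision system has already estimated a part's orientation (as a rotation matrix) and position (as a translation) in the camera's frame, and that a grasp point is known in the part's own frame. The function names, the single-axis rotation and the numbers are illustrative assumptions, not the JPL/ASU software.

```python
import math

def rotation_z(yaw_rad):
    """3x3 rotation matrix for a rotation of yaw_rad about the z axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def transform_point(rotation, translation, point):
    """Map a point from the part's frame to the camera's frame: R * p + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]

# Hypothetical estimated pose: the part sits 0.5 m in front of the camera,
# 0.1 m up, and is turned 90 degrees about the z axis.
part_rotation = rotation_z(math.pi / 2)
part_translation = [0.5, 0.0, 0.1]

# Hypothetical grasp point, 0.1 m along the part's own x axis.
grasp_in_part = [0.1, 0.0, 0.0]

grasp_in_camera = transform_point(part_rotation, part_translation, grasp_in_part)
print(grasp_in_camera)
```

Because the part is rotated, a grasp point that lies along the part's x axis ends up offset along the camera's y axis. That is why the arm needs the part's orientation as well as its position.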

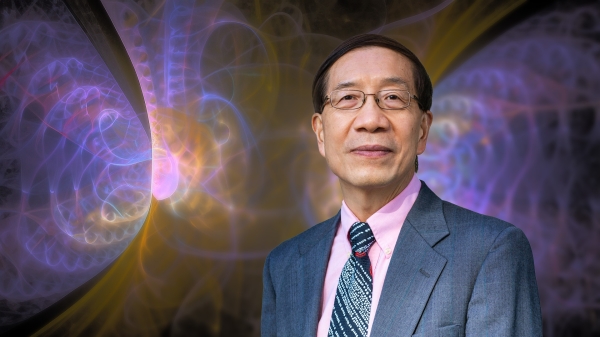

“Effectively satellites in space would not just be passive observers any more,” said Heni Ben Amor, an assistant professor in ASU’s School of Computing, Informatics, and Decision Systems Engineering. “They could engage in all sorts of tasks.”

Couldn’t astronauts assemble things in space? Something big — sure. A lot of little things? That’s a job for a robot. How about remote control by someone in a ground station? Ground control is expensive. And the time delay from Earth gets longer and longer the farther away from the planet you are.
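The growth of that delay with distance follows from the speed of light: a command and its response each travel the full distance once, so the round trip takes at least twice the distance divided by the speed of light. The sketch below works through that arithmetic for a few example distances. The distances are approximate values chosen for illustration, not figures from the project, and real links add processing and relay delays on top.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # defined value, meters per second

def round_trip_delay_s(distance_m: float) -> float:
    """Minimum time for a signal to reach a spacecraft and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S

# Approximate example distances from Earth's surface (illustrative assumptions).
example_distances_m = {
    "low Earth orbit (~400 km)": 400e3,
    "geostationary orbit (~35,786 km)": 35_786e3,
    "the Moon (~384,400 km)": 384_400e3,
}

for name, distance_m in example_distances_m.items():
    print(f"{name}: {round_trip_delay_s(distance_m):.3f} s round trip")
```

Under these assumptions the minimum round trip is a few milliseconds in low Earth orbit, roughly a quarter of a second at geostationary altitude and about 2.6 seconds at the Moon.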

“For a simple task like moving a part from A to B, (robots) are probably more reliable than humans are at this point,” Detry said.

Building this is anything but simple. The goal of the project is to prove that all of the artificial intelligence and robotics technology can fit into a tightly constrained, energy-efficient form.

“We saw this idea where we can do all of these crazy things of detecting an object, going and grasping it, and assembling it into another object on relatively low-compute hardware,” Ben Amor said. “You have problems that cover the whole complexity range in space. You have problems that are aligned with the complexity of assembling a car on Earth, where you have a robot that executes the same motion again and again. It doesn’t need a lot of intelligence. That requires us to have an extremely good knowledge of the place where the different parts are located.”

What Ben Amor, Detry and two students — Shubham Sonawani and Siva Kailas — are working on is, according to the project abstract, “a rendezvous and proximity operations software package that works within the avionics and power constraints of a CubeSat form factor (to) leverage state-of-the-art machine vision, motion planning and motor control.”

Normally you would need a desktop or larger computer to do the computation for a robot like this. That’s not possible in space. The onboard computer also has to be radiation-hardened.

“Your computer at home is probably much more capable than many of the computers in space,” Ben Amor said. The team cracked that problem with a small and cheap off-the-shelf model used in engineering education.

“The idea is to use real-time computer vision on low-compute hardware to localize objects,” he said.

The arm was developed by four technologists at JPL: Rudranarayan Mukherjee, Ryan McCormick, Spencer Backus and Kris Wehage.

The team has been working on the project for almost a year. It will be presented at a November conference at JPL. The project is funded by JPL's Strategic University Research Partnership program.

Top photo: A mockup of a cubesat robotic arm developed by the Jet Propulsion Laboratory reaching for previously launched components. The arm's controlling "brains" are being developed at ASU's School of Computing, Informatics, and Decision Systems Engineering, led by Assistant Professor Heni Ben Amor. The arm will be attached to the cubesats and will locate and assemble components in space. Photo by Charlie Leight/ASU Now
