Independent living

The Low Vision Food Management app was developed by computer engineering doctoral student and IGERT Fellow Bijan Fakhri, and computer science graduate students Jashmi Lagisetty and Elizabeth Lee to help their client manage food inventory. Photo by Pete Zrioka/ASU

Jordan Rodriguez’s preferred mode of transportation around campus is walking, just like thousands of other Arizona State University students. But sometimes Rodriguez could use a little help getting his bearings.

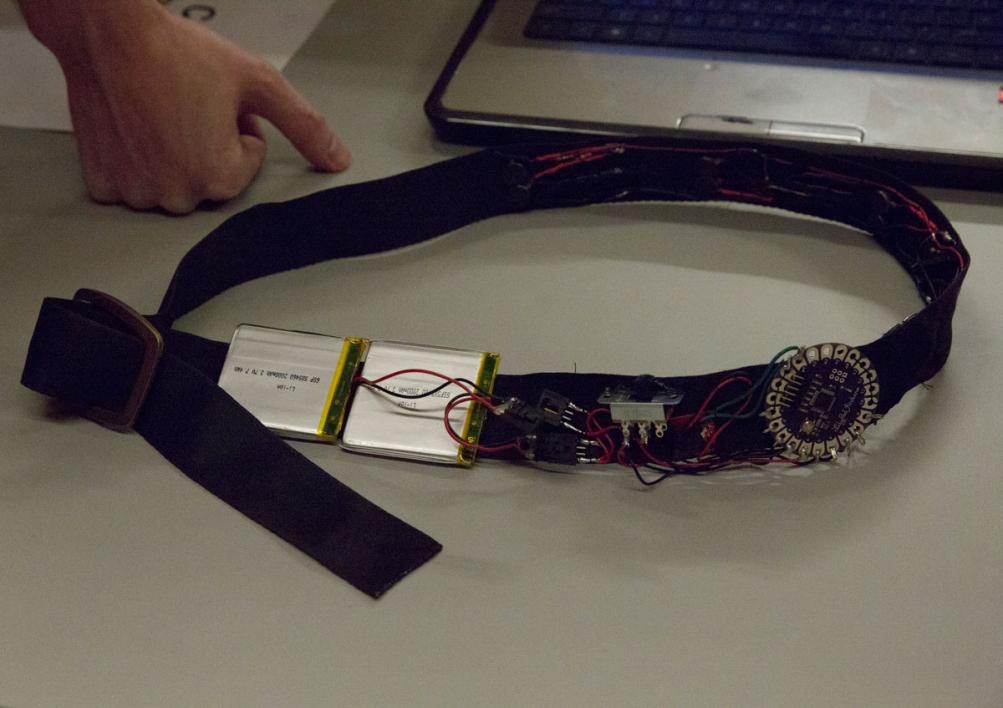

The civil, environmental and sustainable engineering graduate student, who is legally blind, is getting help from computer science and software engineering students in the form of a belt that uses haptic feedback to indicate which direction is north.

This is just one of many projects students from computer science, computer engineering and software engineering, among other disciplines, are working on in CSE 494/591: Assistive Technologies, an Integrative Graduate Education and Research Traineeship (IGERT) Program course led by computer science assistant research professor Troy McDaniel. Through this class, McDaniel is getting students involved in directly helping members of the community by making independent living easier.

“I invite clients — individuals with disabilities, disability specialists, clinical partners and collaborators — to propose projects that address unsolved, real-world needs of those with sensory, cognitive and/or physical impairments,” McDaniel said. “Clients put together a description of their proposed semester-long, team-based project, which I then present to the students during the first day of class.”

Clicking a mouse, skimming the names of food items in the pantry, and knowing what emotion your conversation partner is expressing are just some of the tasks the proposed projects aim to make possible through an interdisciplinary focus.

This is the second semester McDaniel has taught the Assistive Technologies class, but he has more than a decade of experience working on assistive, rehabilitative and health-care applications in the Arizona State University Center for Cognitive Ubiquitous Computing (CUbiC), founded and directed by Sethuraman “Panch” Panchanathan.

CUbiC research encompasses sensing and processing, recognition and learning, and interaction and delivery solutions that are made possible through signal processing, computer vision, pattern recognition, machine learning, human-computer interaction and haptics. Students in McDaniel’s class solved similar problems with these technologies in their client-driven projects.

Employing other senses to convey information

Haptic technology uses the sense of touch to convey information, which can be helpful when visual cues are unavailable.

Graduate software engineering student Dhanya Jacob and computer science students Dylan Ryland and Alejandra Torres used vibrating pancake motors evenly spaced around a belt to help their client, Rodriguez, navigate while walking. The Haptic Compass Belt uses a digital compass board and an Arduino to determine which direction is north and vibrates the corresponding motor to indicate that direction to the wearer.
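The mapping from a compass heading to a motor can be sketched in a few lines. This is only an illustration of the idea, not the team's firmware: the function name `motor_for_north`, the default of eight motors, and the convention that motor 0 sits at the wearer's front with indices increasing clockwise are all assumptions made here.

```python
def motor_for_north(heading_deg: float, num_motors: int = 8) -> int:
    """Return the index of the motor that currently points closest to north.

    heading_deg is the direction the wearer faces, in degrees clockwise
    from north. Motors are assumed evenly spaced around the belt, with
    index 0 at the front and indices increasing clockwise (hypothetical layout).
    """
    # Relative to the wearer's front, north lies at the negative of the heading.
    bearing_to_north = (-heading_deg) % 360.0
    sector = 360.0 / num_motors
    # Shift by half a sector so each motor covers the arc centered on it.
    return int((bearing_to_north + sector / 2) // sector) % num_motors


if __name__ == "__main__":
    for heading in (0, 90, 180, 270):
        print(heading, motor_for_north(heading))
```

Under these assumptions, a wearer facing east (heading 90) would feel the motor on the left hip, since north is to the left.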

Enhancing senses to overcome difficulties

Computer engineering can also help people with low vision enhance what they have difficulty seeing.

Computer engineering doctoral student and IGERT Fellow Bijan Fakhri and computer science graduate students Jashmi Lagisetty and Elizabeth Lee created the Low Vision Food Management app to remove a hassle of independent living for people with low vision. The app can perform tasks such as reading labels on containers and keeping track of food stocks in a pantry. Users mark shelves with X and Y coordinates and with sections labeled A, B, C and so on, then enter in the app where they put each item. Later, when they need to take inventory, the app can tell them what they have and where to find it in a cupboard.
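The section-and-coordinate scheme described above amounts to a small lookup table. The following is a minimal sketch of that data structure, assuming one record per stored item; the `Location` and `Pantry` names and their methods are hypothetical and are not taken from the app itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """A shelf position: a lettered section plus X and Y coordinates."""
    section: str  # e.g. "A", "B", "C"
    x: int
    y: int


class Pantry:
    """Tracks which items were placed where (hypothetical structure)."""

    def __init__(self) -> None:
        self._items: dict[str, list[Location]] = {}

    def put(self, name: str, location: Location) -> None:
        # Store names in lowercase so lookups are case-insensitive.
        self._items.setdefault(name.lower(), []).append(location)

    def find(self, name: str) -> list[Location]:
        return list(self._items.get(name.lower(), []))

    def inventory(self) -> dict[str, int]:
        # Item name mapped to the number of stored units.
        return {name: len(locs) for name, locs in sorted(self._items.items())}


if __name__ == "__main__":
    pantry = Pantry()
    pantry.put("Black beans", Location("A", 1, 2))
    pantry.put("Rice", Location("B", 0, 0))
    print(pantry.find("black beans"))
    print(pantry.inventory())
```

In a real app the result of `find` would be read aloud or shown in large type rather than printed.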

For individuals with low vision or other visual impairments who need more than magnification for visual identification, software engineering graduate student Aditya Narasimhamurthy and computer science graduate students Jose Eusebio, Krishnakant Mishra and Namratha Putta created the QwikEyes Video Calling Assistant. It lets people call a service and share live video from a smartphone camera so an assistant can help with a variety of tasks, from reading a label to finding a small object to navigating. QwikEyes was proposed by client and ASU engineering student Bryan Duarte, who is legally blind, to form the basis of his startup company of the same name. Duarte just completed his bachelor’s degree in software engineering and is continuing on to ASU’s computer science doctoral program.

Another team worked on a project that helps others understand a sensory impairment — in this case, hearing loss. Computer science undergraduate students Jeremy Ruano and Leonardo Andrade were tasked with creating a hearing loss simulator. Their app applied a filter via signal processing technology to simulate mild, moderate and severe hearing loss in several audio clips, which included music and conversation snippets. They also created a filter that simulated their client’s specific levels of hearing loss at different frequencies.
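One simple way to illustrate filtering of this kind is a low-pass filter that attenuates higher frequencies more strongly as the simulated loss gets worse. The sketch below is a stand-in chosen for brevity, not the students' filter: the one-pole design, the function names, and the cutoff frequencies assigned to each severity level are all assumptions and are not clinical values.

```python
import math

# Illustrative cutoff frequencies in Hz (assumed, not clinical values).
SEVERITY_CUTOFF_HZ = {"mild": 4000.0, "moderate": 2000.0, "severe": 1000.0}


def simulate_hearing_loss(samples, sample_rate, severity):
    """Apply a one-pole low-pass filter whose cutoff depends on severity."""
    cutoff = SEVERITY_CUTOFF_HZ[severity]
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff)
    alpha = dt / (rc + dt)
    filtered = []
    previous = 0.0
    for x in samples:
        # Each output moves a fraction alpha of the way toward the input.
        previous = previous + alpha * (x - previous)
        filtered.append(previous)
    return filtered


def tone(freq_hz, sample_rate, num_samples):
    """Generate a unit-amplitude sine tone for testing."""
    return [math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


if __name__ == "__main__":
    rate = 16000
    for freq in (200, 4000):
        original = tone(freq, rate, 1600)
        muffled = simulate_hearing_loss(original, rate, "severe")
        print(freq, round(rms(muffled) / rms(original), 2))
```

A filter matched to one person's hearing, as the team built for their client, would instead apply a separate attenuation to each frequency band.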

Reading and notetaking can pose challenges to a wide variety of people, from individuals with low vision to others with reading disabilities, such as dyslexia, and text-to-speech/speech-to-text can make this task more manageable. Computer science doctoral student Shang Wang and computer science graduate students Kulvir Gahlawat and Deepthi Pothireddy created the Audio Reader/Note Taker, an iPad-based reading and notetaking app that uses text-to-speech technology to read book content to users, and speech recognition that allows users to dictate notes.

Improving physical abilities with the help of computing

Clicking a mouse is a no-brainer for many, but extra help in the form of a neural interface can help people who have ALS, cerebral palsy and other conditions that can create physical limitations. Computer science graduate student Vigneshwer Vaidyanathan, Human and Social Dimensions of Science and Technology program doctoral student and IGERT Fellow Denise Baker, and computer science doctoral students Anish Pradhan and IGERT Fellow Corey Heath created a method of performing a Neural-Controlled Mouse Click. Their neural interface enables people to use a specific thought, in this case the visual of a plus (+) sign, to signal that they want to click the mouse. The neural interface headset, a commercial product sold as an EEG headset, looks for an aggregate of four types of brainwaves measured while the user thinks about the plus sign to create the signature that will trigger a mouse click.

To help people recover from physical injury, physical therapists want to monitor their clients’ motions after assigning specific exercises. Computer science undergraduate student Sanket Dhamala, computer science master’s student Arun Scaria, and computer science doctoral student and IGERT associate Meredith Kay Moore created Shift, a balance training app that physical therapists and their clients can use to hold those clients accountable for doing assigned exercises and to ensure they’re doing them correctly. For example, in a clock exercise where the user tilts his or her body in the 12 directions of a clock face, the user holds the smartphone with the app installed and leans in a given direction. The smartphone’s sensors track body movements and display them in the app.
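The clock exercise can be illustrated by converting a lean direction into the nearest clock hour. This is a sketch under stated assumptions rather than Shift's implementation: it supposes the phone's sensors have already been reduced to a forward component and a rightward component of the lean, and the function name `clock_direction` and the `min_lean` threshold are hypothetical.

```python
import math
from typing import Optional


def clock_direction(forward: float, right: float,
                    min_lean: float = 0.1) -> Optional[int]:
    """Map a lean vector to the nearest clock hour (12 = straight ahead).

    forward and right are the components of the lean, in any consistent
    unit. Returns None when the lean is smaller than min_lean, meaning
    the user is treated as standing upright (assumed threshold).
    """
    if math.hypot(forward, right) < min_lean:
        return None
    # Angle measured clockwise from straight ahead, in the range [0, 360).
    angle = math.degrees(math.atan2(right, forward)) % 360.0
    # Each hour spans 30 degrees, centered on the hour mark.
    hour = int((angle + 15.0) // 30.0) % 12
    return 12 if hour == 0 else hour


if __name__ == "__main__":
    print(clock_direction(1.0, 0.0))   # leaning straight ahead
    print(clock_direction(0.0, 1.0))   # leaning to the right
    print(clock_direction(0.0, 0.01))  # barely leaning
```

An app could compare the returned hour with the hour the therapist assigned to check whether the exercise is being done correctly.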

Improving cognitive skills for physical actions

Other individuals need help understanding their own and others’ physical expressions. Doctoral student in the Human and Social Dimensions of Science and Technology program and IGERT Fellow Shane Kula, graduate student James Kieley, and computer science doctoral student Prajwal Paudyal created the Body Language Mirror, a system that uses a Kinect sensor and the Unity game engine to read body language and identify the emotion being displayed. It is meant to aid people who may not be aware of the emotional body language they or others are expressing.

Delivering the prototypes

For most client proposals, students completed the project to a point where they had a working demo they showed to clients and the general public as part of the Assistive Technologies Demo in late April.

McDaniel was impressed that students worked hard to make helpful technologies to meet their clients’ specific needs.

“I was surprised that many teams went above and beyond project assignments, implementing additional features asked for by clients to make sure that the final prototype was exactly what the client wanted,” McDaniel said.

After the class ends, students are encouraged to deliver their prototypes to the clients.

“I follow up with students after finals and keep in contact with clients to make sure they receive the prototypes,” McDaniel said.

Continuing development for increased usability

Though prototypes are being delivered, Shift and the Body Language Mirror will continue to be developed through student engagement.

Many functions have already been added to Shift, including uploading videos, push notifications and downloading data to a remote database so physical therapists can track progress. But right now the app must be run on a computer, and the phone must be connected via USB to be displayed. The team would like to incorporate Bluetooth or other wireless technology so the user isn’t tethered to a computer, add tactile or audio feedback when the correct balance has been achieved, and conduct user studies to determine usability for people of all ages and abilities.

The Body Language Mirror team finished their first stage of development, but they were unable to get applicable test subjects to conduct experiments, so they would like to find subjects to begin testing. The team also wants to be able to record and replay reactions. Their ultimate goal is to create a system that can rewire the mirror neuron system in the brain so test subjects would become aware of what their emotional body language looks like. Due to the brain’s plasticity, scientists believe it may be possible to train the mirror neuron system.

Creating new computing technologies that can help the world

While these projects targeted the explicit needs expressed by clients, most of whom have disabilities, the progress students achieved toward developing computing technologies is applicable to the implicit needs of the broader population. For example, we can all use help with taking notes, navigating new environments or adhering to routine exercise.
